Evidence-Based Practice and the Knowledge Transfer Problem

Within EBP, knowledge transfer refers to the adoption and usage of evidence — produced through clinical research — by clinical practitioners to influence their clinical decisions and actions.

Challenges to successful knowledge transfer largely center on two influential factors.

- Decisions/(in)actions effected by practitioners.

- Decisions/(in)actions effected by clinical researchers.

In gross overview, we can reduce our contribution to the knowledge-transfer challenge by addressing three issues.

- The research questions we take on.

- The richness of the information we return to clinicians.

- How we communicate our findings.

Caveat: Addressing these three issues likely plays out over a program of research rather than in a single study.

The focus of this presentation is optimizing the decisions/actions of clinical researchers to increase the utility of their experiments for directly informing clinical practice.

The presentation is based upon, and draws heavily from, Sudsawad (2005).

Diffusion of Innovations Theory: Five Factors Influencing the Communication of New Information

Adapted from Rogers (2004) per Sudsawad (2005).

Diffusion of Innovations Theory was not designed for passing information from clinical research to clinical practice, but it applies with little adaptation.

The presentation of the five influential factors affecting the adoption of new information reflects that adaptation.

Relative Advantage

The degree to which a study produces superior evidence compared to what is presently available.

- Relevance

- Validity

Compatibility

The degree to which new evidence is anchored in past experience, existing values, and current needs.

- New evidence meets the current needs of clinical practitioners

Complexity (aka Understandability)

The degree to which research findings are presented as consumable/interpretable by practitioners.

- The clinical applicability is made clear.

- Limits on the clinical applicability are made clear.

- The valid uses/implementations of the intervention are made clear.

Trialability

The degree to which an intervention is immediately accessible.

- The intervention can be implemented easily in clinical practice.

- A clinician can easily ‘try out’ a protocol (appropriately and on a limited basis).

- The valid uses/implementations of the intervention are made clear.

Observability

The degree to which a new or improved clinical intervention is perceived by practitioners as superior to what they are currently doing. For example:

- A demonstrably better clinical outcome at an acceptable cost.

- A demonstrably equal outcome at less cost, or in less time, than status quo ante.

Factors That Affect the Usefulness of Clinical Research Outcomes in Clinical Practice

Once again, this section is adapted from Sudsawad (2005).

Clinical Relevance

- To what degree will the results of a clinical experiment apply directly and immediately to clinical practice?

- To what extent will the results of a clinical experiment correspond to a need perceived by clinicians?

- What is the degree of correspondence between the research question and a clinical question?

- How clinically meaningful is the research question?

- How ‘usable’ will the obtained results be in clinical practice?

- How valuable will clinicians perceive the resulting evidence to be for changing clinical practice patterns?

Conclusion: The greater the clinical relevance of the research question, the more influential the resulting scientific evidence will be for directly informing clinical practitioners.

Possible strategies for optimizing the clinical utility of the evidence you will produce.

Consult with practitioners to determine …

- pressing needs for clinical protocols

- pressing needs in terms of clinical (sub)populations

- the most needed forms of outcome data

- the most needed form of service delivery setting

Form a focus group of clinicians to discuss variations of a research question.

Monitor practice-oriented listservs.

The effort here will address Rogers’s relative advantage factor.

Social Validity

Social validity is multidimensional and each dimension is a continuum (Foster & Mash, 1999).

Inside the clinician-client dynamic: Direct consumers (Foster & Mash, 1999)

Patient; direct consumer

- Are the process and costs of the intervention accessible and acceptable to clients (Wolf, 1978)?

- Are clients satisfied with observed outcomes (Wolf, 1978)?

Clinicians

- Can caregivers make the intervention accessible?

- Are clinicians satisfied with observed outcomes (Wolf, 1978)?

Outside of the clinician-client dynamic: Indirect consumers (Foster & Mash, 1999)

Members of the immediate community. Are members of the immediate community satisfied with observed outcomes (Wolf, 1978)? E.g., …

- Other caregivers

- Teachers

- Classmates

- Colleagues

- Friends

Members of the extended community (society)

- Is the goal of the intervention under test in this experiment valued by society (Wolf, 1978)?

- Will it produce outcomes (however the experiment turns out) that are valued by society and the policy makers who act on behalf of society?

Summary

- Is the goal of the clinical intervention being tested relevant and valued by stakeholders?

- Is the means for achieving that goal (the clinical intervention) acceptable to consumers?

- Are consumers satisfied with the outcome?

Conclusion: Social validity helps a practitioner decide the feasibility of a protocol as well as what values accrue to whom.

Possible strategies for optimizing the clinical utility of the evidence you will produce.

- Consult with practitioners to determine realistically feasible clinical protocols for the target setting

- Consult with direct and indirect consumers to determine the outcomes they need/value

- Consult with direct and indirect consumers to determine what constitutes meaningful changes in activities of daily communicative function.

- Plan to assess consumer satisfaction with respect to the point above.

The effort here will address Rogers’s compatibility and trialability factors.

Ecological validity

“… the functional and predictive relationship between a person’s performance on a test and his or her performance in a variety of real world settings” (Sudsawad, 2005).

The degree to which an outcome measure corresponds to, represents, captures, or predicts communicative behavior in natural settings.

Conclusion: An “outcome measure that has no direct link to, or is not supplemented by, real-world performance can be perceived as less meaningful and less relevant by” practitioners (Sudsawad, 2005).

Possible strategies for optimizing the clinical utility of the evidence you will produce.

- Plan to measure functional change

- Plan to measure participation restriction

- Plan to measure HQOL

- Plan to assess the perceptions of SOs

- Plan to assess the perceptions of members in the ‘immediate community.’

- Assess moderator variables and their effects on outcomes

- Write to optimize communication with practitioners regarding the clinical utility of your findings.

The effort here to establish a link between the experiment and the real world will address Rogers’s understandability/complexity factor.

Significance

Three forms of significance:

- statistical significance,

- practical significance, and

- clinical significance

(Thompson, 2002; Ogles et al., 2001)

Statistical Significance

This is the process and products of hypothesis testing logic

- Reject or fail-to-reject H0

- Setting 1 − β

- Managing nominal α

- Determining n

- Reporting an exact probability

Practical Significance

Practical significance is an interpretation of data using point and interval estimates of effect size (rather than p and α). It is not clinical significance.

The central issue is estimating the degree of separation (the degree of departure from the null state) rather than the dichotomous outcome of reject or fail to reject.

“… knowing that A is greater than B is not enough.” (Kirk, 1996, p.754)

Kirk’s exposition concerned the principal dependent variable in any form of behavioral experimentation.

Practical significance (PS) is an alternative to, or a supplement to, hypothesis-testing logic and statistical significance.

“…to determine whether a result is useful in the real world.”
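As a minimal sketch of a point and interval estimate of effect size, the block below computes Cohen's d for two independent groups with an approximate 95% confidence interval. The choice of Cohen's d, the large-sample standard-error approximation, the function name, and the scores are all assumptions made for illustration; none of them comes from the studies cited here.

```python
import math
from statistics import mean, stdev

def cohens_d_with_ci(treated, control, z_crit=1.96):
    """Return (d, lower, upper): Cohen's d and an approximate 95% CI."""
    n1, n2 = len(treated), len(control)
    # Pooled variance across the two independent groups.
    pooled_var = ((n1 - 1) * stdev(treated) ** 2
                  + (n2 - 1) * stdev(control) ** 2) / (n1 + n2 - 2)
    d = (mean(treated) - mean(control)) / math.sqrt(pooled_var)
    # Large-sample approximation to the standard error of d (an assumption).
    se_d = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    return d, d - z_crit * se_d, d + z_crit * se_d

# Hypothetical post-test scores, invented for illustration only.
treated = [14, 16, 15, 18, 17, 19, 16, 15]
control = [12, 14, 13, 15, 14, 16, 13, 12]

d, lower, upper = cohens_d_with_ci(treated, control)
print(f"d = {d:.2f}, CI.95 = [{lower:.2f}, {upper:.2f}]")
```

Reporting the interval alongside d shows how far the result departs from the null state and how precisely that departure is estimated, which a reject/fail-to-reject decision alone does not convey.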

Clinical Significance: Overview

“Clinical significance refers to the practical or applied value or importance of the effect of an intervention —

that is, whether the intervention makes a real (e.g., genuine, palpable, practical, noticeable) difference in everyday life to the clients or to others with whom the client interacts.” (Kazdin, 1999, p. 332).

“Clinical significance focuses on the importance or the implied value of change in everyday life.”

Clinical significance is a multidimensional (multivariate, multifaceted) construct.

Kazdin’s dimensions

- Degree of symptom change

- Reliable Change Index (RCI)

- MCID plus ES plus CI

- Normative comparison — z-scores obtained through regression

- Meeting one’s role demands

- Functioning in everyday life

- Improved HQOL

- Perceived change versus actual change

- Large change may well be important

- Any amount of change, or even no change, may be perceived as meaningful, and even life changing.

- In some cases, large change, or even some change, is not possible or practical. Learning to cope with the condition may be perceived as an important benefit that improves QOL.

- Social significance

Conclusion: Statistical significance is necessary, but not sufficient for EBP (nor for any other application for that matter).

Possible strategies for optimizing the clinical utility of the evidence you will produce.

- Select outcome measures that assess activity limitation, or participation restriction, or HQOL and reflect real-world status.

- Define MCID

- Select one or some of the procedures described in this section (or something else) to quantify meaningful change.

The effort here will address Roger’s observability factor.

Clinical Significance: Reliable Change Index (RCI)

RCI: Recovery

Clinical significance is returning to normal functioning (Jacobson, Roberts, Berns, & McGlinchey, 1999).

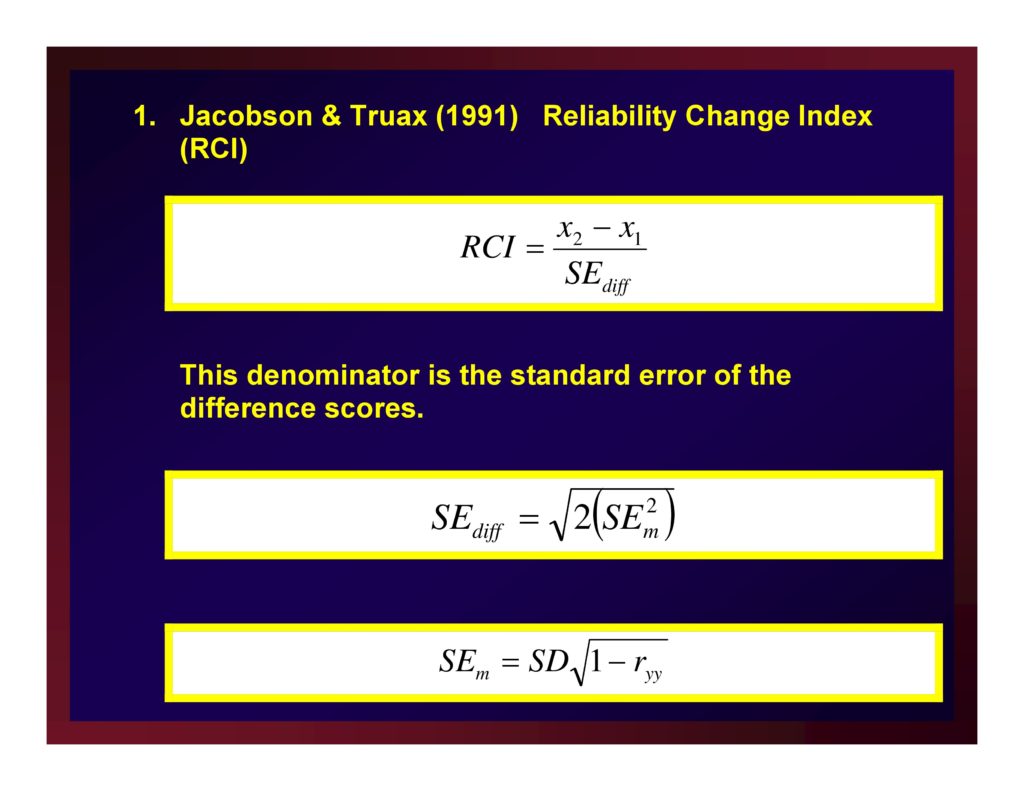

Jacobson & Truax (1991) Reliable Change Index (RCI)

This denominator is the standard error of the difference scores.

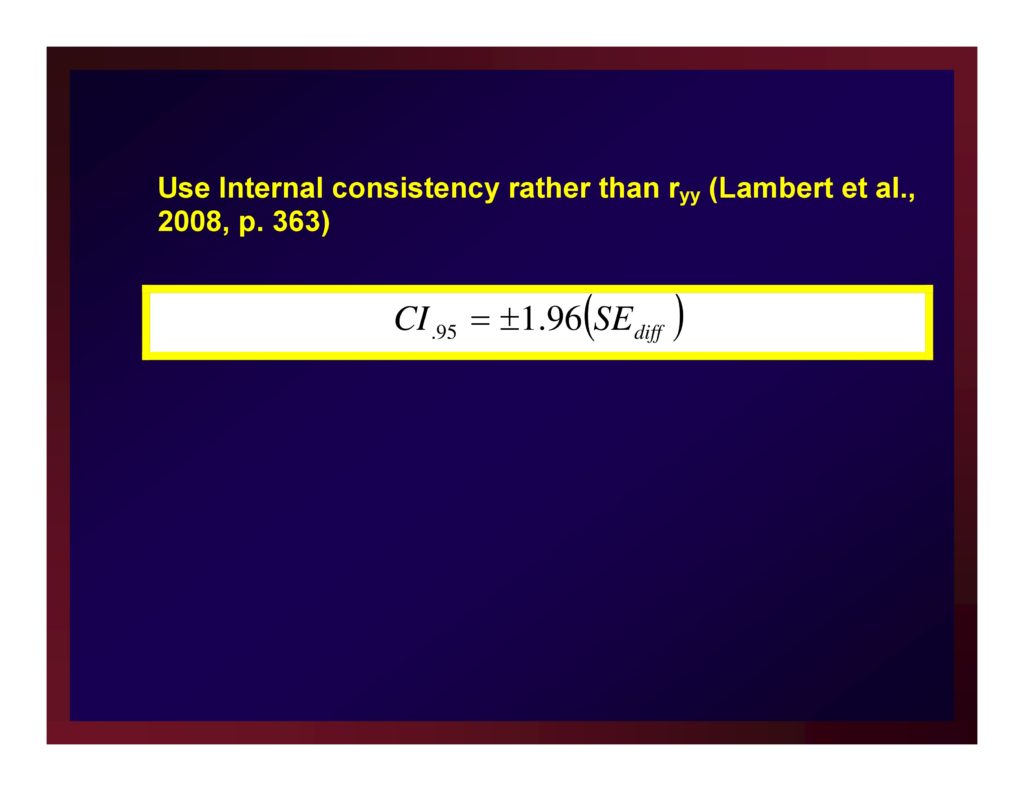

Use internal consistency rather than ryy (Lambert et al., 2008, p. 363)

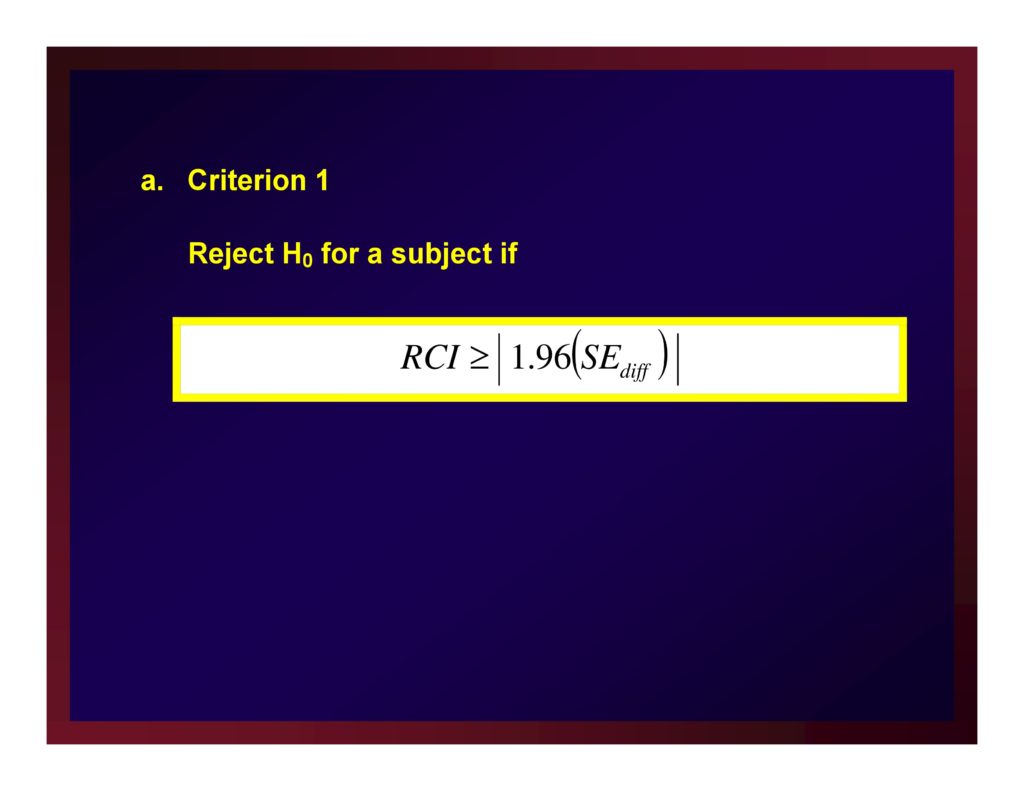

Criterion 1

Reject H0 for a subject if |RCI| ≥ 1.96, that is, if the absolute pre-post difference is at least 1.96 × SEdiff.

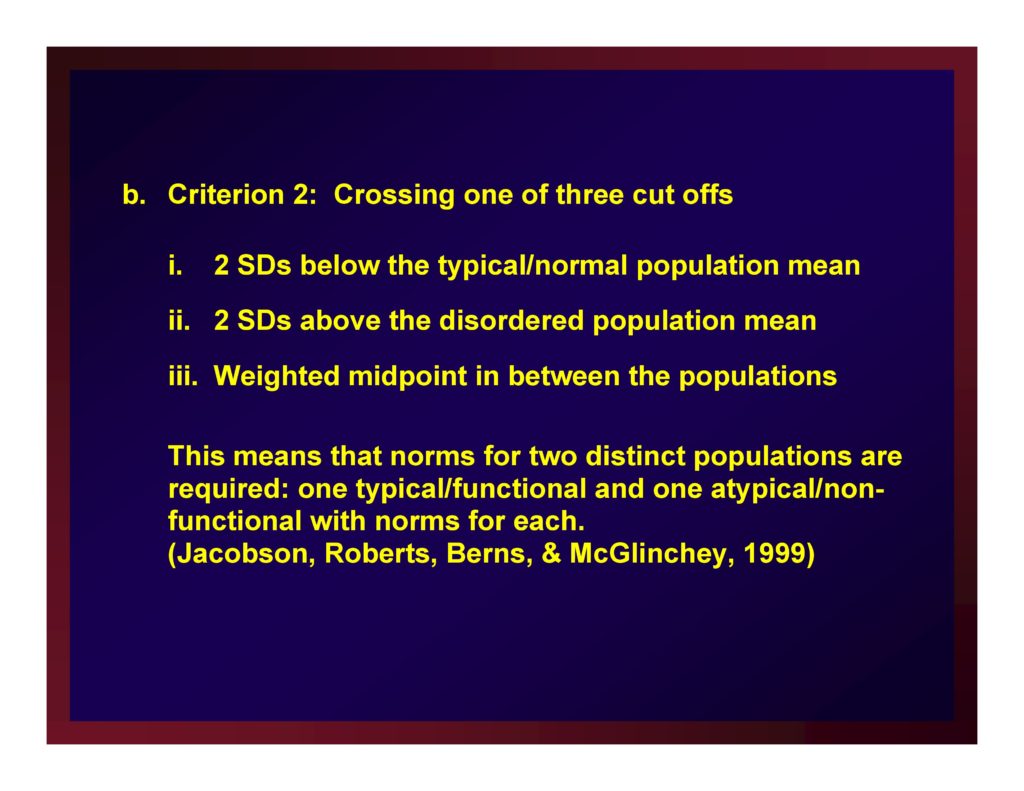
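The RCI and Criterion 1 can be sketched in a few lines. The block follows the Jacobson & Truax (1991) formulation as commonly stated: the standard error of measurement is SD × sqrt(1 − r), SEdiff is sqrt(2 × SE²), and the RCI is the pre-post difference divided by SEdiff. The function names and the numeric values are hypothetical, chosen only for illustration.

```python
import math

def se_measurement(sd_pre, reliability):
    """Standard error of measurement: SD * sqrt(1 - r)."""
    return sd_pre * math.sqrt(1.0 - reliability)

def se_difference(sd_pre, reliability):
    """Standard error of the difference scores: sqrt(2 * SE^2)."""
    return math.sqrt(2.0 * se_measurement(sd_pre, reliability) ** 2)

def reliable_change_index(pre, post, sd_pre, reliability):
    """RCI: pre-post difference divided by SEdiff."""
    return (post - pre) / se_difference(sd_pre, reliability)

# Hypothetical values: pre-test SD, reliability estimate, and one client's scores.
sd_pre = 7.5
reliability = 0.80
rci = reliable_change_index(pre=32.5, post=47.75,
                            sd_pre=sd_pre, reliability=reliability)

# Criterion 1: the change is reliable when |RCI| >= 1.96.
is_reliable = abs(rci) >= 1.96
print(f"RCI = {rci:.2f}, reliable change: {is_reliable}")
```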

Criterion 2: Crossing one of three cut offs

- 2 SDs below the typical/normal population mean

- 2 SDs above the disordered population mean

- Weighted midpoint in between the populations

This means that norms are required for two distinct populations: one typical/functional and one atypical/nonfunctional (Jacobson, Roberts, Berns, & McGlinchey, 1999).

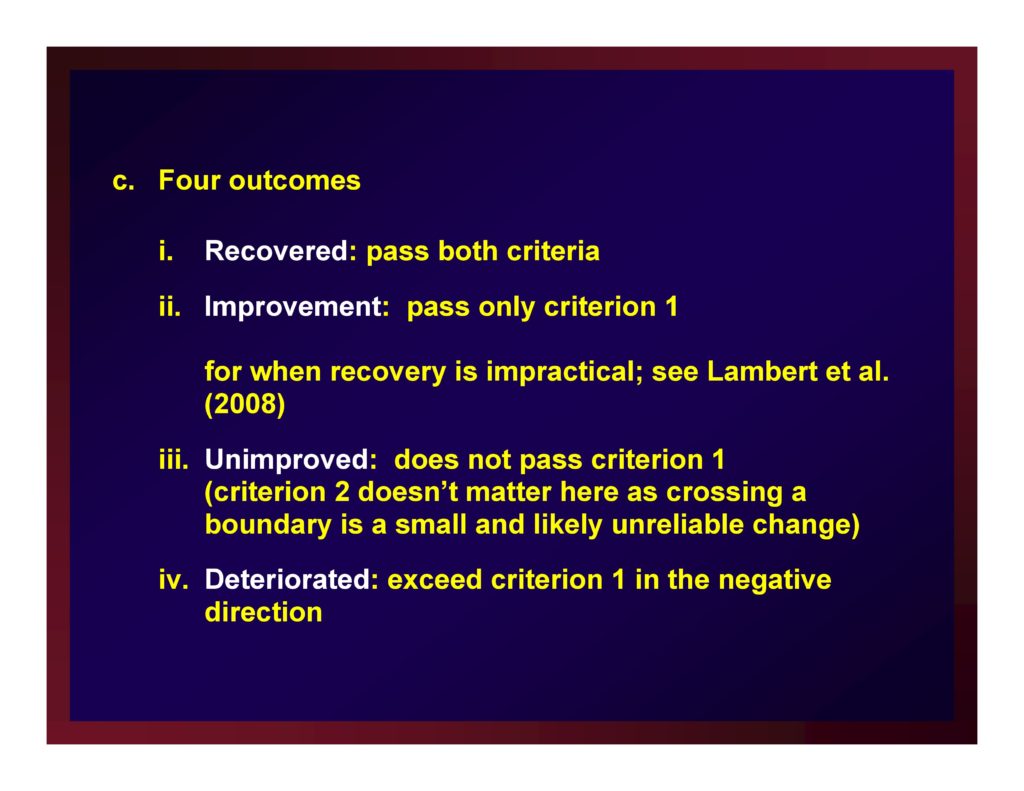
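A minimal sketch of the three cut-offs, assuming a scale on which higher scores indicate better functioning (so the typical mean lies above the disordered mean). The weighted midpoint uses the Jacobson & Truax (1991) form, in which each population mean is weighted by the other population's standard deviation. The function name and the norms are hypothetical.

```python
def criterion2_cutoffs(mean_typical, sd_typical, mean_disordered, sd_disordered):
    """Return the three candidate cut-offs for Criterion 2.

    Assumes higher scores mean better functioning.
    """
    # 2 SDs below the typical/normal population mean.
    below_typical = mean_typical - 2 * sd_typical
    # 2 SDs above the disordered population mean.
    above_disordered = mean_disordered + 2 * sd_disordered
    # Weighted midpoint: equally many SD units from each population mean.
    weighted_midpoint = (
        (sd_typical * mean_disordered + sd_disordered * mean_typical)
        / (sd_typical + sd_disordered)
    )
    return {
        "below_typical": below_typical,
        "above_disordered": above_disordered,
        "weighted_midpoint": weighted_midpoint,
    }

# Hypothetical norms for the two populations.
cutoffs = criterion2_cutoffs(mean_typical=100.0, sd_typical=10.0,
                             mean_disordered=70.0, sd_disordered=15.0)
for name, value in cutoffs.items():
    print(f"{name}: {value:.1f}")
```

With these invented norms the weighted midpoint sits 1.2 standard deviations from each population mean, which is why it falls closer to the population with the smaller spread.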

Four outcomes

- Recovered: pass both criteria

- Improvement: pass only criterion 1 — for when recovery is impractical; see Lambert et al. (2008)

- Unimproved: does not pass criterion 1 (criterion 2 doesn’t matter here as crossing a boundary is a small and likely unreliable change)

- Deteriorated: exceed criterion 1 in the negative direction
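The four outcomes above can be expressed as a small decision rule. This is a sketch under stated assumptions: higher scores indicate better functioning, "crossing" a cut-off means starting below it and ending at or above it, and the SEdiff and cut-off values are hypothetical.

```python
def classify_outcome(pre, post, se_diff, cutoff, z_crit=1.96):
    """Classify one client's change as one of the four outcomes.

    Assumes higher scores mean better functioning.
    """
    rci = (post - pre) / se_diff
    if rci <= -z_crit:
        # Reliable change in the negative direction.
        return "deteriorated"
    if rci < z_crit:
        # Criterion 1 not met; crossing the cut-off is not considered.
        return "unimproved"
    if pre < cutoff <= post:
        # Reliable change (criterion 1) and the cut-off is crossed (criterion 2).
        return "recovered"
    # Reliable change without crossing the cut-off.
    return "improved"

# Hypothetical SEdiff and cut-off, for illustration only.
SE_DIFF = 4.74
CUTOFF = 88.0

for pre, post in [(70, 92), (70, 82), (70, 75), (70, 58)]:
    print(pre, post, classify_outcome(pre, post, SE_DIFF, CUTOFF))
```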

Several “enhancements” have been proposed. Studies of obtained values for Jacobson & Truax and all competitors applied to many data sets conclude that the algorithms produce similar findings and none is simpler than JT.

Tingey et al. (1996) published a relaxed criterion for assessing “reliable improvement” when recovery is not possible.

Clinical Significance: MCID plus Effect Size plus CIs

Minimal Clinically Important Difference (MCID)

Man-Son-Hing et al. (2002) advanced the notion that not every statistically significant difference (proportion, correlation, etc.) is important.

Although the units of measure for Man-Son-Hing et al. were descriptive statistics (rather than estimates of effect size), they also understood that all interpretations of experimental results are local.

On the basis of existing literature, a researcher must determine a criterion that a new result must exceed to be considered clinically significant: the MCID.

Adapting Man-Son-Hing et al. by making the leap from mean differences to differences in effect sizes renders MCID practicable.

Three Different Examples of MCID

No intervention is available for a certain debilitating condition.

Any improvement, no matter how small relative to a no-treatment control, represents an important advancement in managing the condition.

In this case, obtaining a value of say d ≥ .10 could very well constitute an important difference.

An intervention protocol is broadly recognized as a clinical standard for care and is known to effect a level of change corresponding to an average effect size of d = .80 (i.e., an average effect size in comparison with no-treatment control studies).

A new technology is introduced as an alternate form of care but only at substantial cost in making the change from one technology to another.

The cost is deemed worthwhile if the new technology improves outcomes by at least 25%.

All other things remaining constant, an outcome of d = .20 is an important one in an ANCOVA of data obtained through a parallel-groups design contrasting the new technology and the old technology.
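The arithmetic behind this example is that a 25% improvement on a standard effect of d = .80 corresponds to d = .20 for the new-versus-old contrast. A minimal sketch, with hypothetical function names:

```python
def mcid_from_relative_gain(standard_d, required_gain):
    """MCID expressed as an effect size: the required share of the standard effect."""
    return standard_d * required_gain

def meets_mcid(observed_d, mcid):
    """True when the observed new-versus-old effect size reaches the MCID."""
    return observed_d >= mcid

# Values taken from the example: standard of care d = .80, required gain 25%.
mcid = mcid_from_relative_gain(standard_d=0.80, required_gain=0.25)
print(f"MCID: d = {mcid:.2f}")
```

For instance, `meets_mcid(0.25, mcid)` would flag an observed d of .25 as an important difference under this criterion, while an observed d of .15 would not qualify.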

Consider the same situation but one in which the new technology achieves the same level of change as the old technology but at a substantially faster rate and substantially reduced cost.

In this case, d = 0.00 is an important outcome using the same research design.

That is, the new technology achieves the same outcome as the standard but in less time and at less cost.

The analysis in this case would be supplemented with equivalency testing.

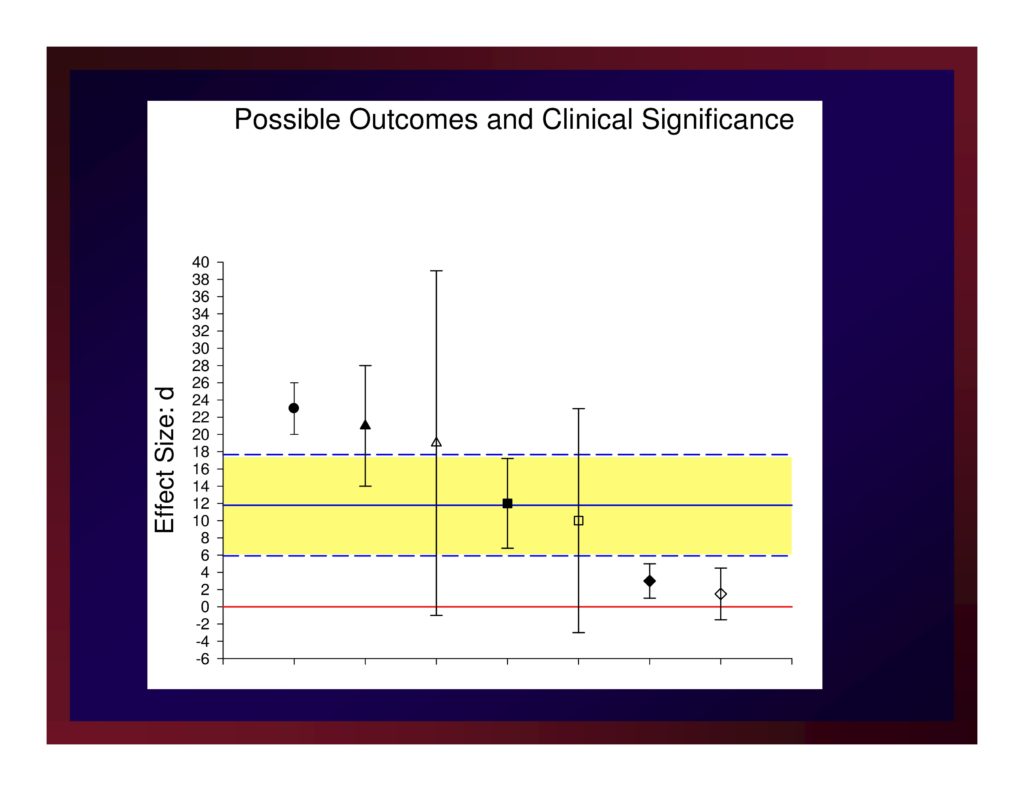

A new treatment protocol will be considered an important advancement if it produces an estimate of effect size that exceeds the average effect size of the treatment studies testing competing protocols.

That same new treatment will be considered very important if it produces an estimate of effect size that equals or exceeds the upper boundary of the confidence interval about that average effect size.

Example: Single-Subject Data

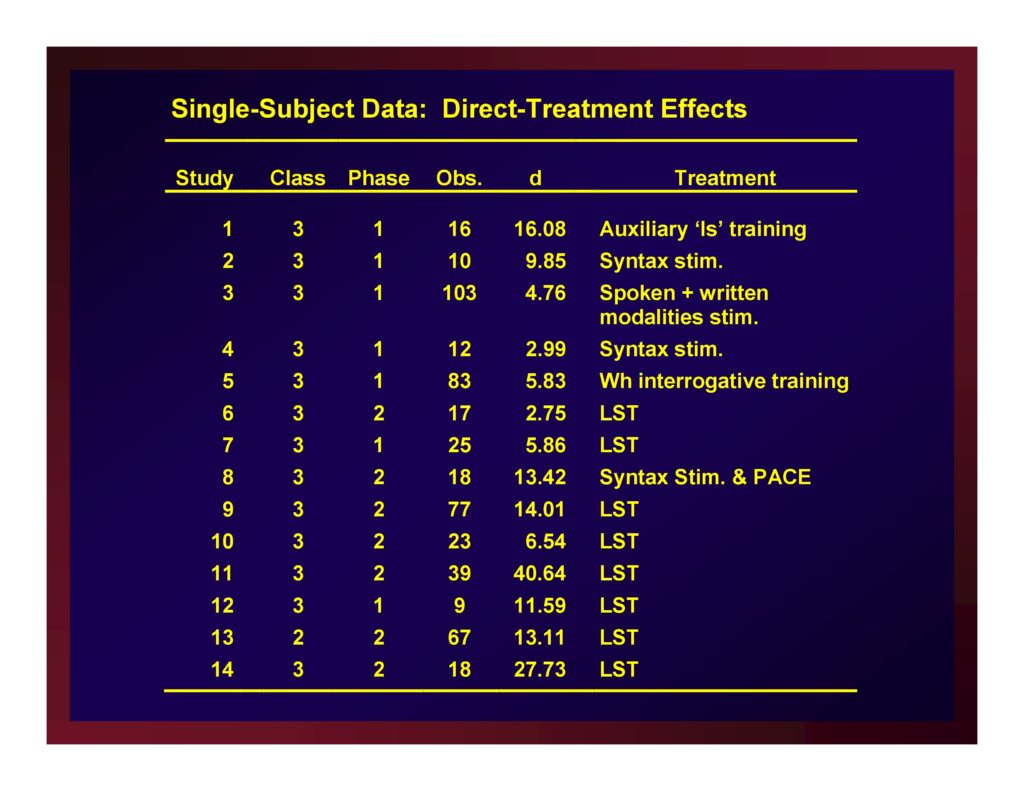

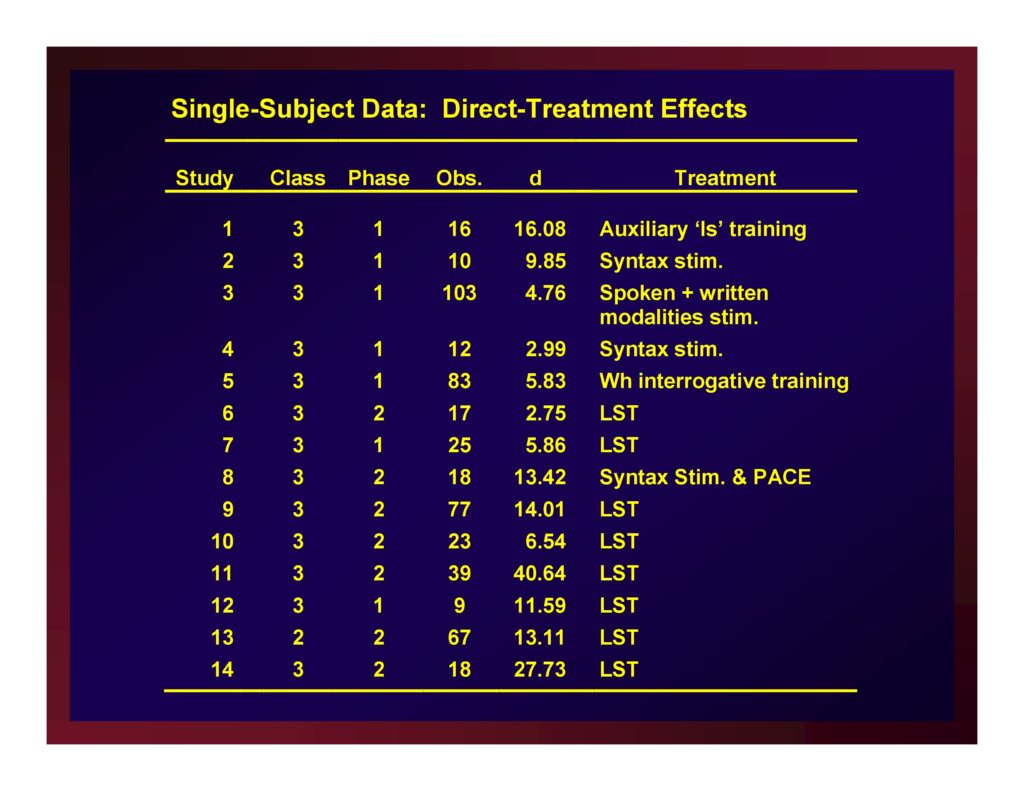

Single-Subject Data: Direct-Treatment Effects

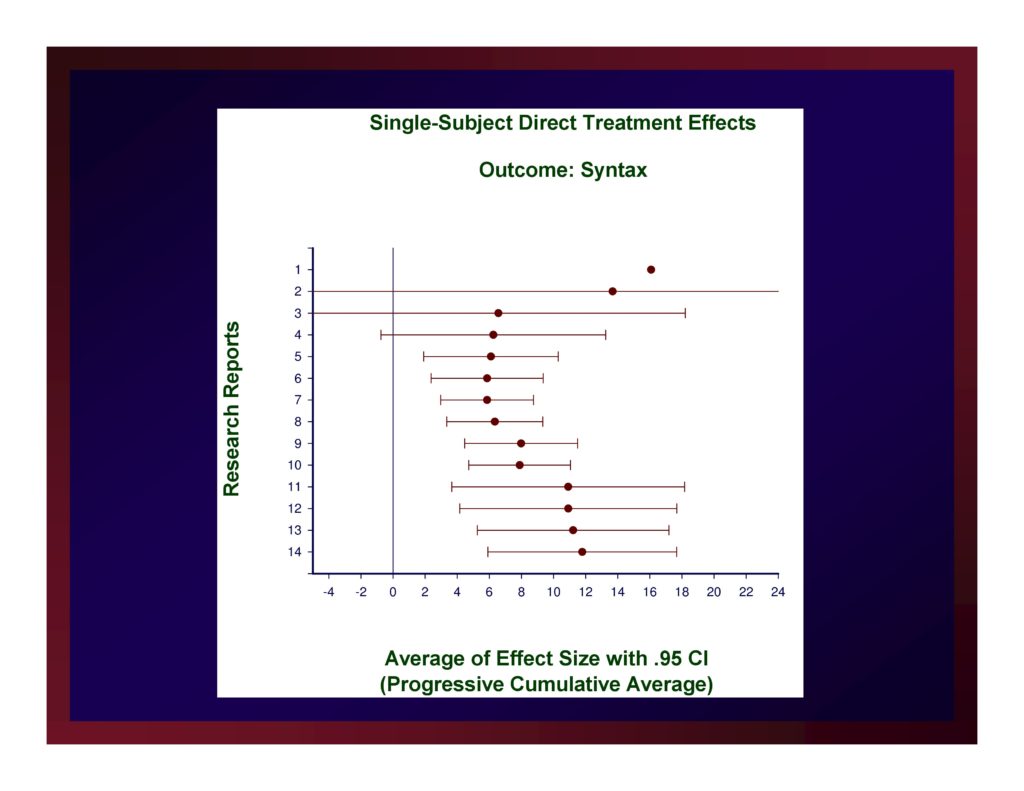

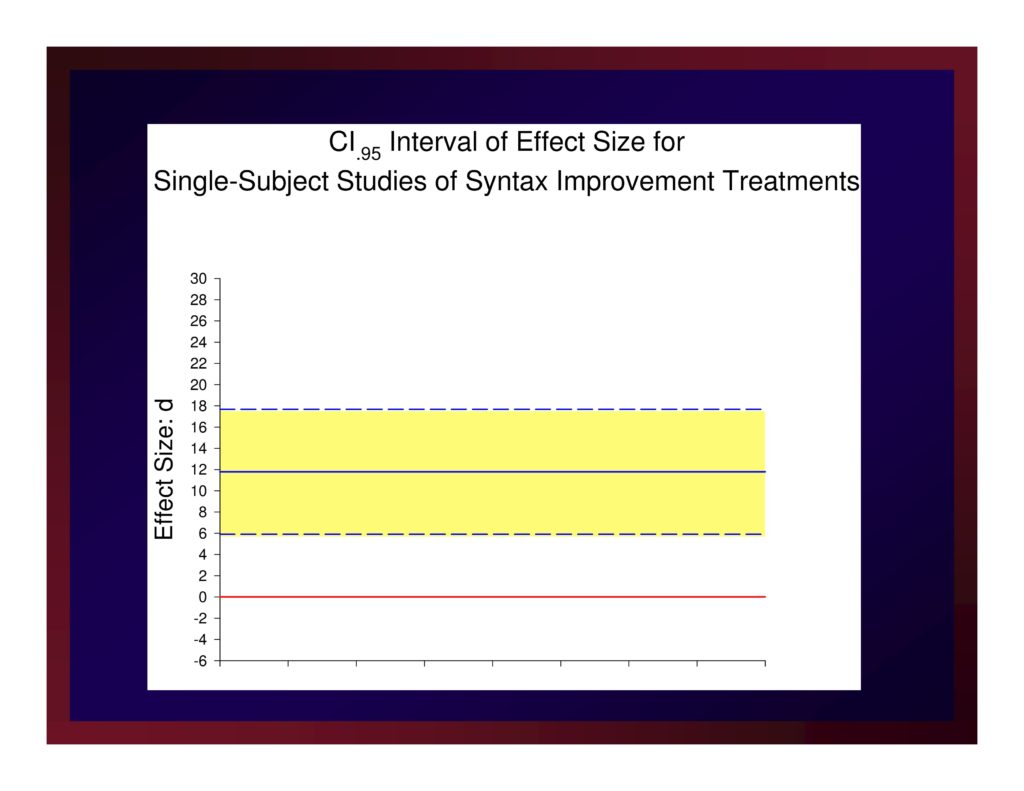

Single-Subject Direct-Treatment Effects: Average of Effect Size with .95 CI

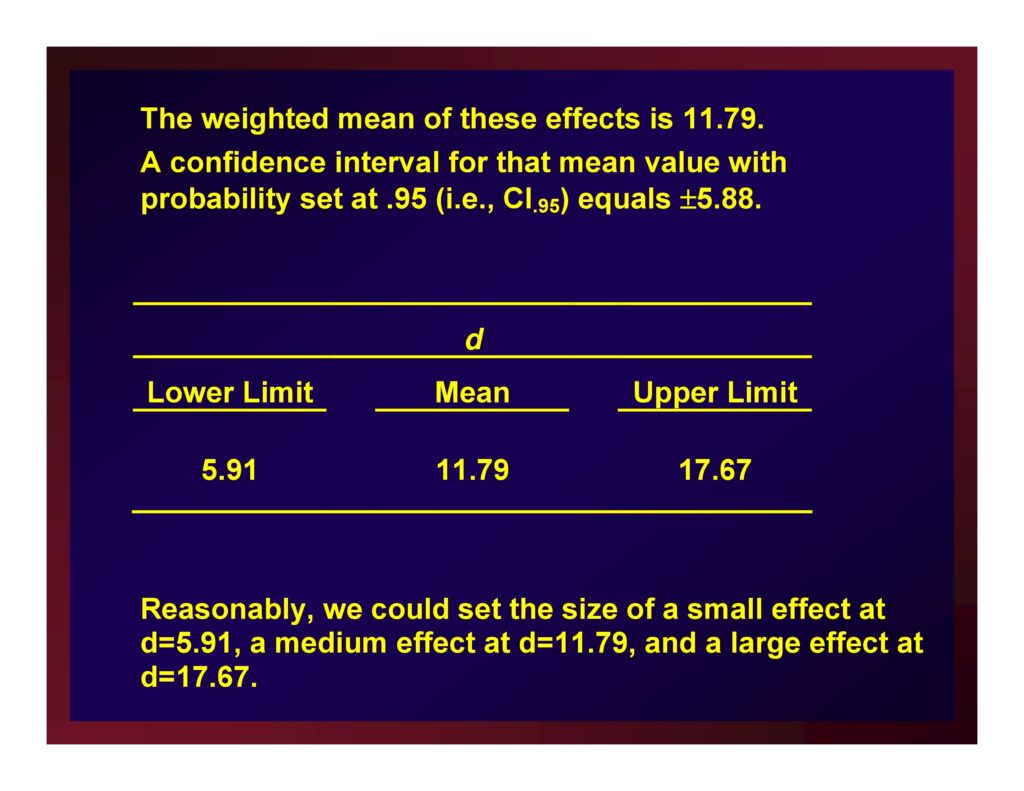

The weighted mean of these effects is 11.79.

A confidence interval for that mean value with probability set at .95 (i.e., CI.95) equals ±5.88.

Reasonably, we could set the size of a small effect at d=5.91, a medium effect at d=11.79, and a large effect at d=17.67.
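The three benchmarks follow directly from the weighted mean and the half-width of its confidence interval. The sketch below reproduces that arithmetic and adds a hypothetical helper that labels a newly observed effect size against the benchmarks; the helper and its labels are illustrative assumptions.

```python
# Values reported above for the single-subject direct-treatment effects.
WEIGHTED_MEAN = 11.79
CI_HALF_WIDTH = 5.88

small = round(WEIGHTED_MEAN - CI_HALF_WIDTH, 2)   # lower bound of CI.95
medium = WEIGHTED_MEAN                            # weighted mean effect
large = round(WEIGHTED_MEAN + CI_HALF_WIDTH, 2)   # upper bound of CI.95

def label_effect(d):
    """Label an observed effect size against the three benchmarks."""
    if d >= large:
        return "large"
    if d >= medium:
        return "medium"
    if d >= small:
        return "small"
    return "below the small benchmark"

print(small, medium, large)
```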

CI.95 Interval of Effect Size for Single-Subject Studies of Syntax Improvement Treatments

Possible Outcomes and Clinical Significance

How do I obtain values for this mini meta-analysis?

If a meta-analysis has been published in your target literature, you’re golden.

If not, work with your statistician to obtain what you need.
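For orientation before that conversation, here is a minimal sketch of one common way to average effect sizes: an inverse-variance weighted (fixed-effect) mean with a 95% confidence interval. The weighting scheme behind the values shown in this presentation is not stated, so this scheme is an assumption, and the effect sizes and variances below are invented for illustration.

```python
import math

def weighted_mean_effect(effects, variances, z_crit=1.96):
    """Return (mean, lower, upper) for an inverse-variance weighted mean."""
    # Each study is weighted by the inverse of its sampling variance.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    mean_effect = sum(w * d for w, d in zip(weights, effects)) / total_weight
    # Standard error of the weighted mean under a fixed-effect model.
    se_mean = math.sqrt(1.0 / total_weight)
    return mean_effect, mean_effect - z_crit * se_mean, mean_effect + z_crit * se_mean

# Hypothetical effect sizes and sampling variances from five studies.
effects = [0.42, 0.65, 0.30, 0.81, 0.55]
variances = [0.04, 0.09, 0.02, 0.12, 0.05]

mean_effect, lower, upper = weighted_mean_effect(effects, variances)
print(f"mean d = {mean_effect:.2f}, CI.95 = [{lower:.2f}, {upper:.2f}]")
```

A statistician may prefer a random-effects model or a different effect-size metric for single-subject data, so treat this only as a starting point.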

Clinical Significance: Normative Comparison

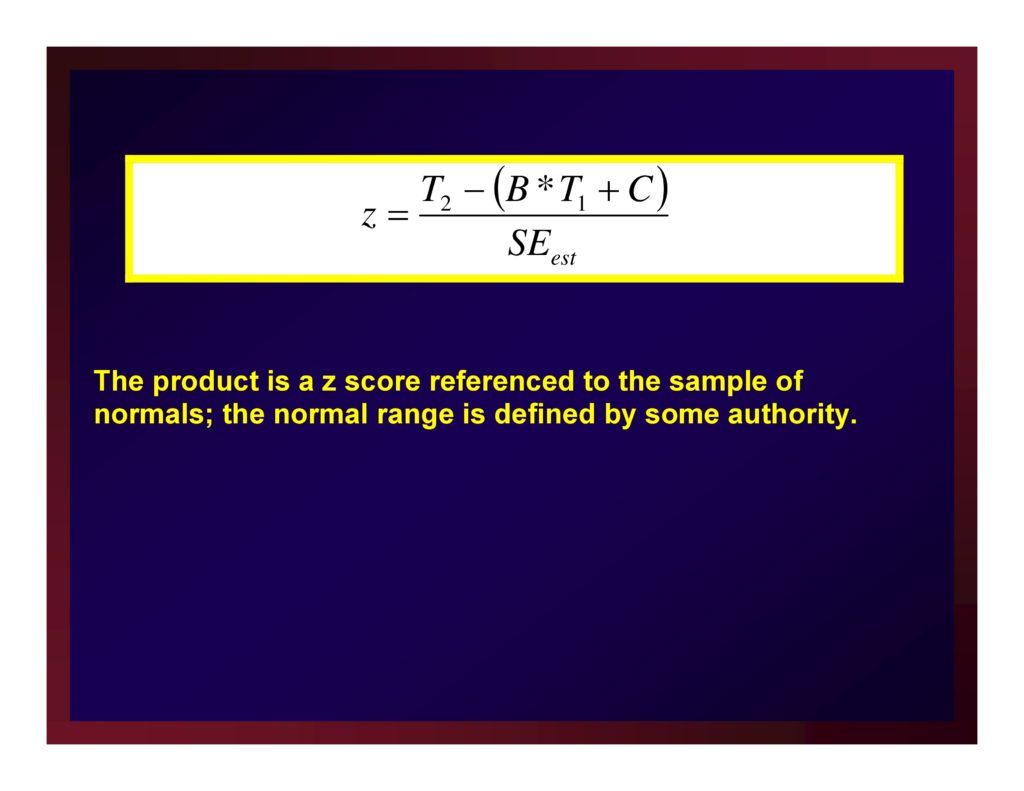

Regression-based z scores (Johnson et al., 2006)

From a control sample, regress post-test scores (dependent variable) on pre-test scores (predictor).

From that analysis, retain the slope (B), the intercept, and the standard error of estimate (SEest).

Take the time(1) and time(2) measures of an experimental participant and plug them into the regression equation: the time(1) score yields a predicted time(2) score, and the difference between the observed and predicted time(2) scores is divided by SEest.

The result is a z score referenced to the control (normal) sample; the normal range is defined by some authority.
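The steps above can be sketched as follows. This is a minimal illustration using ordinary least squares on a control sample, with the post-test score as the outcome and the pre-test score as the predictor; the function names and all scores are hypothetical.

```python
import math

def fit_control_regression(pre, post):
    """Regress post-test on pre-test; return (slope, intercept, se_est)."""
    n = len(pre)
    mean_pre = sum(pre) / n
    mean_post = sum(post) / n
    sxx = sum((x - mean_pre) ** 2 for x in pre)
    sxy = sum((x - mean_pre) * (y - mean_post) for x, y in zip(pre, post))
    slope = sxy / sxx
    intercept = mean_post - slope * mean_pre
    # Standard error of estimate: residual SD with n - 2 degrees of freedom.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(pre, post))
    se_est = math.sqrt(ss_res / (n - 2))
    return slope, intercept, se_est

def regression_z(pre, post, slope, intercept, se_est):
    """z score: observed minus predicted post-test score, divided by SEest."""
    predicted_post = intercept + slope * pre
    return (post - predicted_post) / se_est

# Hypothetical control-sample scores at time(1) and time(2).
control_pre = [48, 52, 55, 60, 63, 67, 70, 74]
control_post = [50, 53, 57, 59, 66, 68, 71, 77]
slope, intercept, se_est = fit_control_regression(control_pre, control_post)

# Hypothetical experimental participant.
z = regression_z(pre=55, post=68, slope=slope, intercept=intercept, se_est=se_est)
print(f"z = {z:.2f}")
```

A positive z indicates a time(2) score higher than the control sample would predict from the participant's time(1) score; whether that counts as clinically meaningful depends on the normal range adopted.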

Conclusion

We use the term “intervention” in the broadest sense, so it encompasses screening, diagnosis, prevention, treatment, counseling, and so forth.

Optimizing research conducted on clinical interventions for the purpose of informing clinical practice centers on four influential factors:

- Clinical relevance

- Social validity

- Ecological validity

- Clinical significance

To the extent that clinical researchers can incorporate these factors in planning clinical experiments, we all win:

- clients,

- families,

- caregivers,

- the clinical professions, and

- the clinical sciences.

References

Foster, S. L. & Mash, E. J. (1999). Assessing social validity in clinical treatment research: Issues and procedures. Journal of Consulting and Clinical Psychology, 67(3), 308.

Jacobson, N. S., Roberts, L. J., Berns, S. B. & McGlinchey, J. B. (1999). Methods for defining and determining the clinical significance of treatment effects: Description, application, and alternatives. Journal of Consulting and Clinical Psychology, 67(3), 300.

Jacobson, N. S. & Truax, P. (1991). Clinical significance: A statistical approach to defining meaningful change in psychotherapy research. Journal of Consulting and Clinical Psychology, 59(1), 12.

Johnson, E. K., Dow, C., Lynch, R. T. & Hermann, B. P. (2006). Measuring clinical significance in rehabilitation research. Rehabilitation Counseling Bulletin, 50(1), 35–45.

Kazdin, A. E. (1999). The meanings and measurement of clinical significance. Journal of Consulting and Clinical Psychology, 67(3), 332–339.

Kirk, R. E. (1996). Practical significance: A concept whose time has come. Educational and Psychological Measurement, 56(5), 746–759.

Lambert, M. J., Hansen, N. B. & Bauer, S. (2008). Assessing the clinical significance of outcome results. In Nezu, A. M. & Nezu, C. M. (Eds.), Evidence-based outcome research: A practical guide to conducting randomized controlled trials for psychosocial interventions (pp. 359–378). New York: Oxford University Press.

Man-Son-Hing, M., Laupacis, A., O’Rourke, K., Molnar, F. J., Mahon, J., Chan, K. B. & Wells, G. (2002). Determination of the clinical importance of study results. Journal of General Internal Medicine, 17(6), 469–476.

Ogles, B. M., Lunnen, K. M. & Bonesteel, K. (2001). Clinical significance: History, application, and current practice. Clinical Psychology Review, 21(3), 421–446.

Rogers, E. M. (2004). Diffusion of Innovations (4th Ed.). Free Press.

Sudsawad, P. (2005). A conceptual framework to increase usability of outcome research for evidence-based practice. American Journal of Occupational Therapy, 59(3), 351–355.

Thompson, B. (2002). “Statistical,” “practical,” and “clinical”: How many kinds of significance do counselors need to consider? Journal of Counseling and Development, 80(1), 64–71.

Tingey, R., Lambert, M., Burlingame, G. & Hansen, N. (1996). Assessing clinical significance: Proposed extensions to method. Psychotherapy Research, 6(2), 109–123.

Wolf, M. M. (1978). Social validity: The case for subjective measurement or how applied behavior analysis is finding its heart. Journal of Applied Behavior Analysis, 11(2), 203.