The following is a transcript of the presentation video, edited for clarity.

The other side of the formula. You think grantwriting is really torture, and you’re really having to create these new ideas and you’re this incredible generator. It’s actually much more formulaic than that on both sides. Reviewers are experienced enough that they can identify a common weakness a mile away. I can review grants now and tell you the score of a grant within decimals. The errors are predictable.

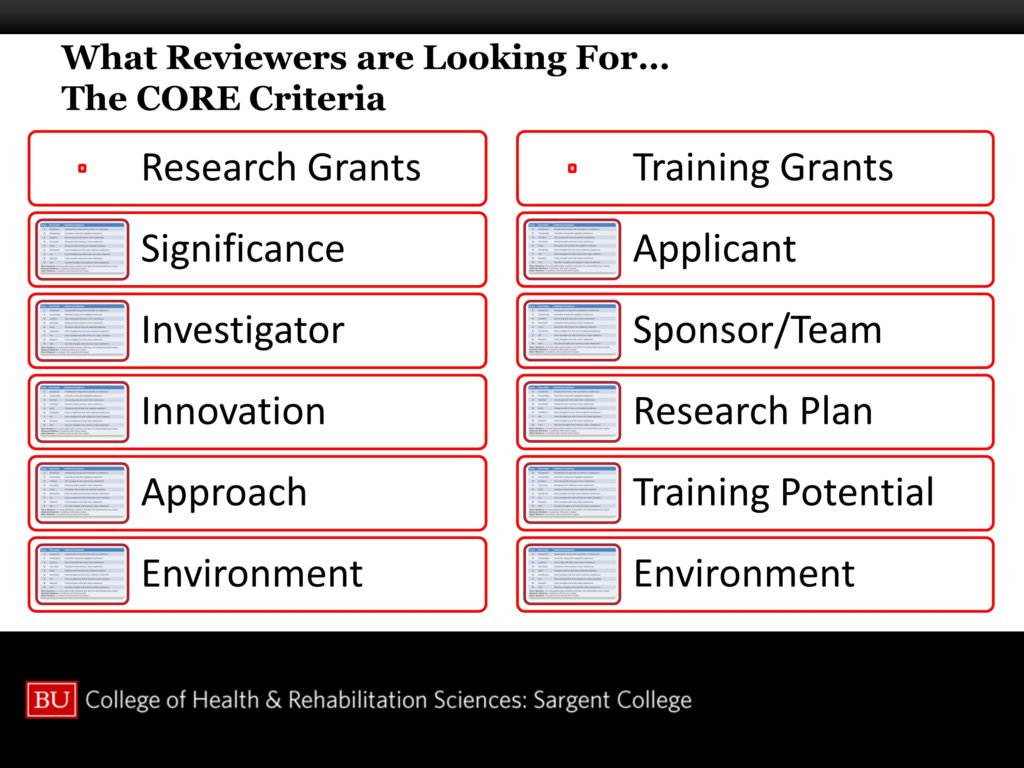

I’m going to go through the individual criteria, the major ones, not all of them, but the major ones.

Significance

Here are some of the strengths that will typically be identified.

The project addresses a critical barrier to the progress of the field.

Remember yesterday I talked about this idea of putting yourself in the stream of research. This is the part that says, yeah, you put yourself in this stream of research and this project is going to move that process ahead. Something will be advanced. It could be technical capability. It could be clinical practice. Usually it’s scientific knowledge. There will be some clear advance if the aims are achieved. It’s great if that holds whether the hypothesis or the null is supported, but in any case, this is going to make a contribution.

So the weaknesses, and I’ve touched on these already. A lacking or weak theoretical framework comes up all the time. People get so close to their own work, they get so challenged by the technical details and by the really, really difficult minutiae of the experimental design that they never step back and figure out what’s the theoretical framework for this thing. That has to be in there, it has to be explicit.

The aims have to be hypothesis-driven. It’s incredible how often the specific aims read without a hypothesis being anywhere on the page. It’s a big, big, big mistake. There has to be a hypothesis that is attached to, or at least suggests a clear experimental design.

The opposite of this is where you have something that’s driven by methods. Your department chair paid for some really expensive piece of equipment and a bunch of people, and you’ve got to use them, and this is what you know how to do with them, so that’s what you’re going to do. A lot of projects are like that.

Or they are population-driven: “I work in this clinic. I have this great clinical data set. I have access to this incredible population.” Then that’s the data set looking for a question. People will say that all the time, and it’s just disastrous. Motivated by where you are, not what needs to be known. Those populations exist, and if they’re so great, somebody would have gone and asked that, maybe. In our field, not necessarily so, there aren’t very many of us. Paucity of information, I should have said, is poor motivation.

Poor integration of the existing literature. It’s really bad if one of your reviewers is a major contributor to that literature, and you haven’t cited them at all. So, there’s the gratuitous citation, you want to avoid that of course. But to ignore somebody who is a major contributor in the area, that’s a big problem. Make sure you’ve covered the literature.

Then weak connection to human health—you have to make sure you acknowledge where you are in the continuum in improving human health. You need to be clear about it.

PI / Candidate / Research Team

Some people who review grants, the first thing they do is go to the biosketch and look at the PI. They’ll raise their hand and say, “You know, I don’t see this person’s really published enough.” Then the review is over. You can be very vulnerable to being weak on publications, weak with respect to your demonstrated expertise, weak in terms of the team. All of that stuff has to be there. This has to be the best team you can assemble to address this question. People are not really going to give you a big break.

That said, what you have to be is appropriate for your career stage. Nobody expects you to have had your Nobel Prize when you’re 25. But at 25 you should have a demonstrated trajectory. You have to have been productive or on a trajectory that says you’re going to be productive. You know what that means. You know what the high achievers in your cohort are doing. Hopefully that’s you. That’s the metric that you’re evaluated on.

A weak publication record, this shouldn’t be too disturbing. If your publication record is weak, there are other ways to get federal funding in your hands. The question here is whether your publication record is weak relative to the mechanism you’re targeting.

If you had non-productive postdoc training. If you did a postdoc—if you went through the trouble of doing a postdoc and you didn’t have manuscripts come out of that, that’s a little disastrous. There are different ways to recover from that, but that’s a difficult thing. A postdoc is the only time in anyone’s career that you have 100% dedicated time for research. If you’re not productive at that opportunity when you’re walking into an active, productive lab, there is something seriously wrong. That’s actually a very risky spot to be in. You’re going to be very productive in all likelihood. But the flip side of that is you better be really productive in all likelihood.

Unproductive prior grant support can be just as devastating. Unpublished dissertation research, I’m not sure why that would happen, but that can happen and you need to take care of that somehow.

Lack of training—we talked about this—lack of a formal plan. I covered all this stuff. The mentor might be too junior. There are ways to work around that. Just get a really senior, experienced mentor on your team. You could actually have part of the plan being mentoring a mentor. It’s not quite that explicit, but it’s sort of obvious in the organization, or apparent in the organization. Mentoring a mentor can be really a great thing to do.

Innovation

You should be innovative. You know what innovation is.

Techniques that are not current or appropriate. Tilted too heavily to description: that’s just “no theoretical framework.” Too incremental.

And here’s the worst one I was trying to get to. The most painful review I ever did, which was many, many years ago and I’ll never forget it, was an application that was perfectly written. Everything was there. This thing was a poster child for beautiful grants. Just incredible. Everything. Except the idea was just really so uninteresting. The idea was fatally uninteresting. I just did not care how that came out. There’s an infinite number of questions that could be asked, but most of them shouldn’t get anyone’s resources. Their time, their money, their life energy should not be put into most of those questions. We could come up with those questions all day. There’s a tiny, tiny little number of questions that deserve to be asked and that fit into the scientific stream. Those are the ones for you to identify. A lot of them are just sort of on the ground for you to pick up, and tweak a little bit so they become more innovative.

Research Design

Pitfalls. You have to identify pitfalls and strategies for overcoming pitfalls. That’s a requirement these days. Reviewers want to know that you’ve thought enough about the project to recognize where things might go in unintended directions, and to anticipate those and provide remedies before you encounter those problems. The more of those problems you can anticipate and the stronger your remedies, the stronger the application looks. Very, very important. Necessarily risky aspects of the experiment are anticipated and managed.

Feasibility I think I mentioned. Overambitious, I’m sure I mentioned.

Sample size—using power analysis, using real support values. When you come up with 25 subjects, and your power analysis has some weird numbers in the effect sizes that get you to 25—it is obvious that you just ran the process backward. Be real. Show where your real preliminary data come from. And it can be from existing literature, it doesn’t have to be your own data necessarily. Where does the effect size come from?
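To make the "ran the process backward" point concrete, here is a minimal sketch of a power calculation in both directions. It assumes a two-group comparison of means, a two-sided test, and the standard normal approximation; the function names and the default alpha and power values are illustrative choices, not something specified in the talk. A real application would use the design-appropriate method and an effect size taken from preliminary data or the literature.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate subjects per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * ((z_alpha + z_beta) / d) ** 2,
    where d is the standardized effect size (Cohen's d).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


def implied_effect_size(n, alpha=0.05, power=0.80):
    """Effect size you would have to assume for n per group to look adequate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return (z_alpha + z_beta) * sqrt(2 / n)


# Forward: start from a justified effect size and derive the sample size.
print(n_per_group(0.5))

# Backward: start from a convenient sample size and see what it assumes.
print(round(implied_effect_size(25), 2))
```

Under these assumptions, a moderate effect (d = 0.5) calls for about 63 subjects per group, while 25 per group is only adequate if the true effect is close to d = 0.8. A reviewer who sees 25 will look for where an effect that large comes from.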

Outcomes

Preliminary or hypothetical data. If you don’t have enough time to generate preliminary data, a beautiful thing to do is generate hypothetical data. You can sort of make up a data set and show how you’re going to treat that data set. Run it through your analysis. Show how this data set is going to inform a theory. And show different alternatives. It can really be a beautiful way to do it. In chemistry they call it “dry labbing the experiment.” You can tell people, “I’m going to dry lab this whole experiment. Here’s how it would come out.” It’s beautiful when it works.
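As a rough illustration of running a hypothetical data set through a planned analysis, the sketch below simulates two groups under two alternative scenarios and computes a standardized effect for each. Everything here is an assumption made for illustration: the group means, the standard deviation, the sample size of 60, the scenario labels, and the choice of Cohen's d as the analysis. The numbers it prints are simulated, not results, and should be labeled that way in an application.

```python
import random
from math import sqrt
from statistics import mean, variance


def simulate_group(true_mean, sd, n, rng):
    """Draw n hypothetical observations from a normal distribution."""
    return [rng.gauss(true_mean, sd) for _ in range(n)]


def cohens_d(control, treated):
    """Standardized mean difference (treated minus control), pooled SD."""
    n1, n2 = len(control), len(treated)
    pooled_var = (
        (n1 - 1) * variance(control) + (n2 - 1) * variance(treated)
    ) / (n1 + n2 - 2)
    return (mean(treated) - mean(control)) / sqrt(pooled_var)


# Fixed seed so the hypothetical data set is reproducible.
rng = random.Random(42)

# Two alternative outcomes, each run through the same planned analysis.
scenarios = {"hypothesis supported": 0.5, "null supported": 0.0}
for label, shift in scenarios.items():
    control = simulate_group(0.0, 1.0, 60, rng)
    treated = simulate_group(shift, 1.0, 60, rng)
    print(f"{label}: simulated d = {cohens_d(control, treated):.2f}")
```

Showing both scenarios side by side is one way to demonstrate how either outcome would inform the theory.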

Don’t fail to consider possible pitfalls.

Example Reviewer Comments

Here are some comments that are very, very common.

“The first two aims have already been completed.” That’s deadly. You’re all done.

“The proposed research appears very unlikely to be completed in the proposed time”—that’s overambitious—“Too many experiments are proposed. There’s no evidence”—that’s feasibility—“the applicant can generate this level of productivity.” That’s also your biosketch. Right? You’ve never published that many experiments in three years, or whatever.

This is also deadly: “The applicant’s weak publication record and lack of a dissemination plan suggest that it is unlikely the proposed research will be published.” None of this matters one whit if you don’t publish it. The grant is not a product. Hiring people is not a product. Your next grant is not a product. The only product we have in science is your peer-reviewed manuscript. If you’re not anticipating peer-reviewed publication, this is really just wasted effort on everybody’s part. You should put it in there explicitly.

If the work has already been done—especially if it has been done by Sesame, Bialy and Garlic in Bagels Today—and provided an unequivocal result, that would be fatal. Unless you’re proposing a replication.

Failing to demonstrate feasibility, availability of subjects, demonstrated expertise, institutional support. These are all things we covered.

Enthusiastic comments, on the other hand—“a strong publication record.” First-author publications in high-impact journals.

“The composition of the team is strong, with highly productive researchers in complementary areas.” So they’re not all doing the same thing, they all bring something unique to the project. And that everything that’s needed by the project is there: statistics, technical support, analysis. You might need a physicist. Everybody you need is on the team.

And “it’s enhanced by demonstrated logistics.” If you’ve got a logistical plan for meeting and collaborating that’s already in place, you’re all set. All you need is the cash, and trip the trigger and off you go.

Preparing for Your First NIH Grant: More Videos in This Series

1. Who Is the Target Audience for Your Grant?

2. How Do I Determine an Appropriate Scope, Size, and Topic for My Research Project?

3. Common Challenges and Problems in Constructing Specific Aims

4. Are You Ready to Write Your First NIH Grant? Really?

5. Demystifying the Logistics of the Grant Application Process

6. Identifying Time and Budgetary Commitments for Your Research Project

7. Anatomy of the SF424: A Formula for NIH Research Grants

8. Common Strengths and Weaknesses in Grant Applications