The following is a transcript of the presentation video, edited for clarity.

Introduction

At some point a number of years ago, I decided that what I want to do when I grow up is to work to try to increase the quantity and quality and value of implementation science.

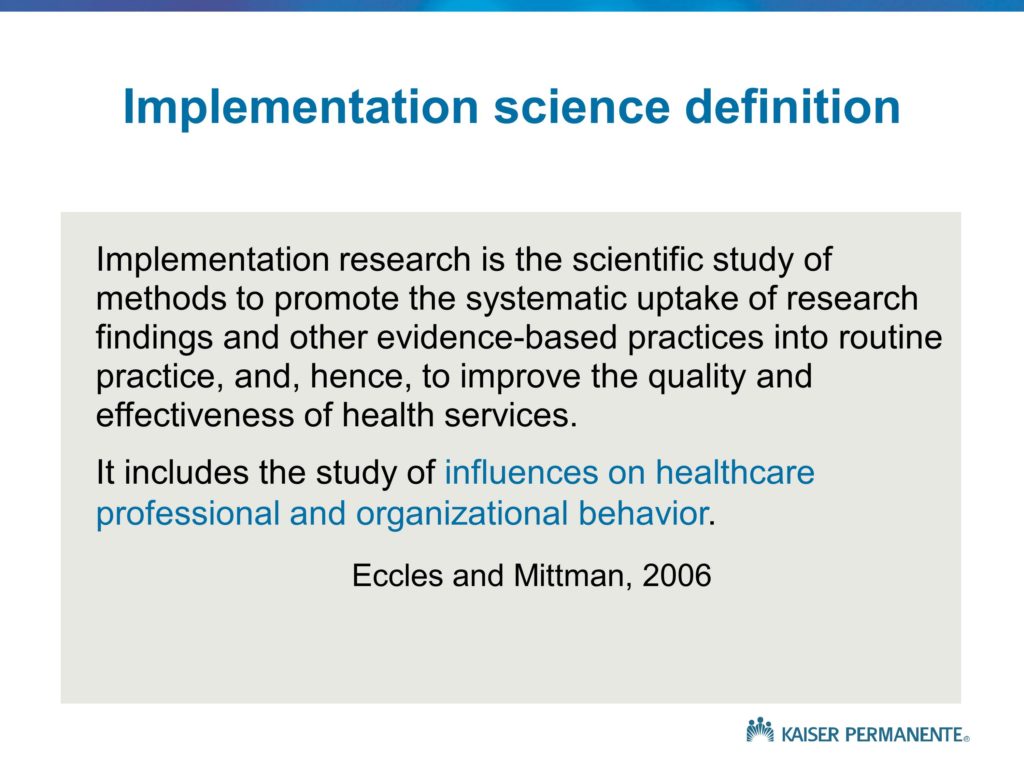

This was based on some experience within the Department of Veterans Affairs and the VA QUERI initiative, with its work to increase the impact and uptake of research to improve veteran healthcare. Through a series of conversations with colleagues, I recognized that clinical research is not enough. Its findings and innovations are not self-implementing; there is a science of implementation. And that science, as I indicated, needs a fair amount of effort to increase both its quality and its quantity.

As a consequence, each time I’m given an opportunity to speak and participate and contribute to a meeting like this, it’s a chance that I jump at.

Obviously the close proximity to my home base in Los Angeles, as well as the beauty of the setting were factors as well. But I do appreciate the opportunity.

My task is a little bit different, and somewhat more mundane I suppose, than the one Dennis was given: to provide a set of frameworks and ways of thinking about what implementation science is, why it is important, and how we go about designing and conducting implementation studies.

And perhaps more importantly, what is it about the field, and the way we are conducting our implementation studies, that has left us with somewhat limited impact? We have a very large body of work, but I think it’s fair to say that if we take a half-full versus half-empty perspective on the field, we’re very much on the half-empty side. We have much to do. The increase in the quantity of implementation science activity hasn’t yet yielded the benefits that we need.

Much of my talk will focus on presenting a set of frameworks and a set of answers to the question: Why hasn’t implementation science work yet led us to, some might say, a cure for cancer? I put it that way to highlight the difficulty of the problem and the challenges associated with it.

But I think it’s fair to say — and I know many of the speakers over the next couple of days will reinforce this idea — it’s fair to say that we could be doing things somewhat differently in order to enhance the value of what we do.

As I said, I began at the VA, the US Department of Veterans Affairs, affiliated with the QUERI program (the Quality Enhancement Research Initiative). That program was designed to bridge the research-practice gap, to contribute to the VA’s transformation during the 90s that I’m sure most of you are familiar with, and to focus the efforts, energies, and attention of the health services research community within VA on the problems and issues of transforming the healthcare delivery system. Now, primarily due to health reform, we suddenly see a great deal of comparable interest within the health sector outside VA. So I’ve been in the position of carrying some of the insights and trial-and-error learning experiences from VA to systems like Kaiser Permanente and the UCLA Healthcare System, which are interested in trying to understand and replicate what VA accomplished, and to do so in perhaps two to three years rather than 20 to 30. I’ve been given opportunities to help share some of those experiences.

In some ways I often feel somewhat like a management consultant. Back when I was in business school getting a PhD, the MBA students used to talk about management consulting as an occupation and its job opportunities, and the shorthand description of that occupation was that a management consultant essentially steals ideas from one client and sells them to another. In some ways, that’s what I do lately among VA, Kaiser, and UCLA. But it’s all done with full transparency; all of them recognize the contributions as well as the benefits, and I think it’s all above board.

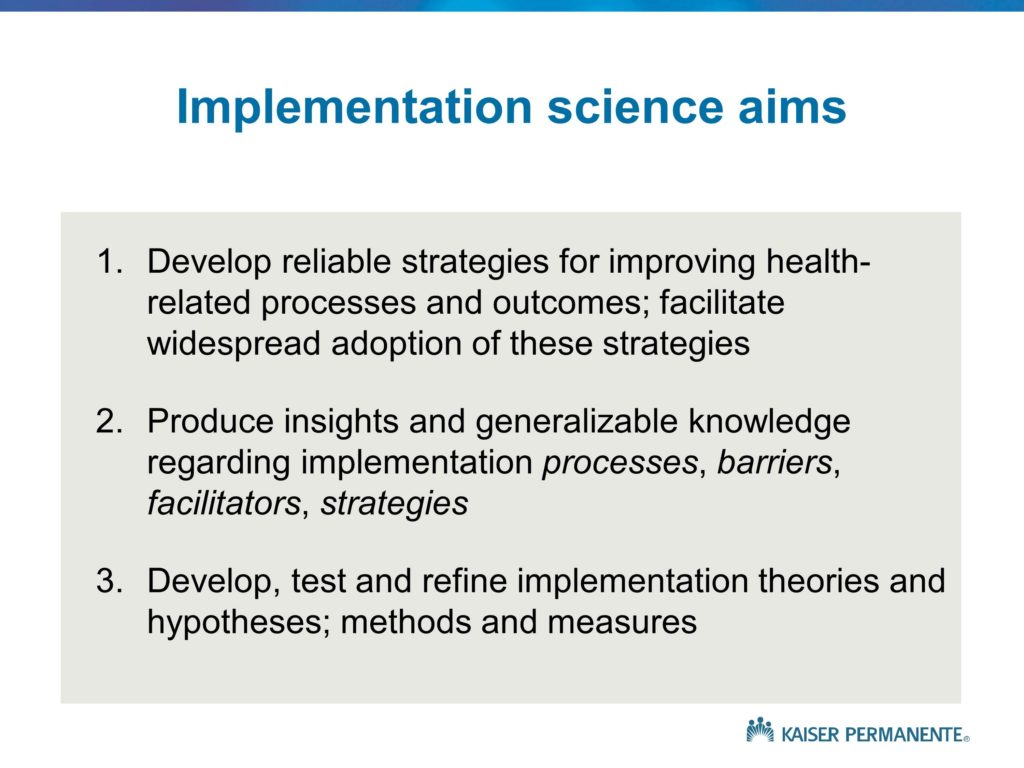

So let me move on and provide you with what I feel is a summary of the key topics or the categories of knowledge that I hope will be covered during the conference, and that to me represent basically what the field of implementation science is all about. My hope is that at the end of this conference, you have a very good sense as to what implementation science is, what its primary aims are, what the scope of the field is. Why is it important? What are some of the key policy, practice and science goals for the field?

How does it relate to other types of health research? As a PhD social scientist working in a health care delivery system and health setting, the bulk of my work, and what I know about, is health. I know the work that you do is at the intersection between health and some of the social services and other sectors. So if you will excuse my focus on health and perform the translation in your own minds from clinical and medical issues to the broader set of issues, that would save me from the burden of trying to do that on my own and speaking about issues that I don’t know much about.

In addition to the question of how does it relate to other types of health research, one of the key areas of focus of the QUERI program and my presentation will be item number four. What are the components of an integrated, comprehensive program of implementation research? What are the kinds of studies that we need to think about in order to move from the evidence, the kernels, the programs to implementation and impact? That is a bit of a challenge, in part because the components of the integrated, comprehensive portfolio are somewhat different from what we see in other fields.

And finally, the broad set of questions: How does one go about planning, designing, conducting, and reporting the different types of implementation studies? I will touch on that issue only briefly, but it is the focus of many of the other talks.

Implementation Research

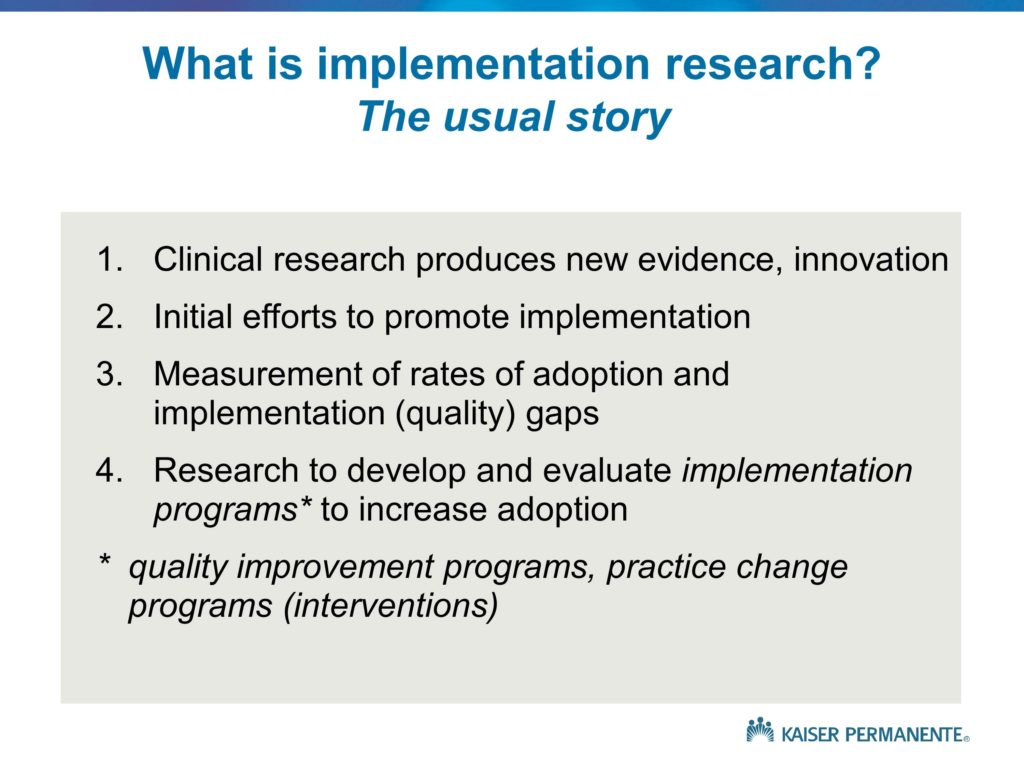

Let me go through a set of slides that provide different ways of thinking about what implementation research is all about, and why it’s important. These are all somewhat different, but largely complementary. This is the usual story that we see, and the usual way that we think about implementation research. And it’s a story that for the most part does not have a happy ending.

I’ll say more about that in a minute. But generally speaking, what we see is the introduction or development, the publication of a new treatment, a new innovation, new evidence. Often, at the time that that innovation is released, we do see some modest efforts toward implementation. Most of those efforts focus on dissemination, increasing awareness. We see press releases that follow the publication of the journal articles. In the health field, we often see greater impacts on clinician awareness when studies are reported in the New York Times or Time Magazine than in the original journals. There are often editorials that are published that accompany the release of the new findings that point out the importance of ensuring they are adopted and used by the practicing clinicians. But by and large these are relatively modest efforts towards implementation, and they focus primarily on dissemination, increasing awareness, with the assumption that awareness will lead to adoption and implementation — an assumption that we know, by virtue of all of us being in this room, is not valid.

A number of years later, we will often see articles published that measure rates of adoption and, for the most part, show that significant implementation or quality gaps exist. So, yes, there was the publication of this large, definitive, landmark study. Yes, there were press releases and efforts on the part of specialty societies and other professional associations to publicize it and increase awareness. There were, at best, small increases in adoption, and oftentimes there were no increases at all. That finding, the documentation of those quality and implementation gaps, is the usual trigger for implementation studies. And those implementation studies tend to be large trials, often without the kind of pilot and single-site studies that Dennis talked about and that I will discuss briefly as well: large trials that evaluate specific implementation strategies or programs, practice change programs, or quality improvement strategies that attempt to increase adoption.

And the reason this story typically has an unhappy ending is that those large trials of implementation strategies tend to show null results. Not only do we see a lack of naturally occurring uptake and adoption, but even when we explicitly, intensively, and proactively try to achieve implementation, more often than not (or more often than we would like) those efforts fail. It’s that unhappy ending that I will address with some of the frameworks, to try to explain what it is about the way we design, conduct, follow up on, and report implementation studies that may contribute to those disappointing results.

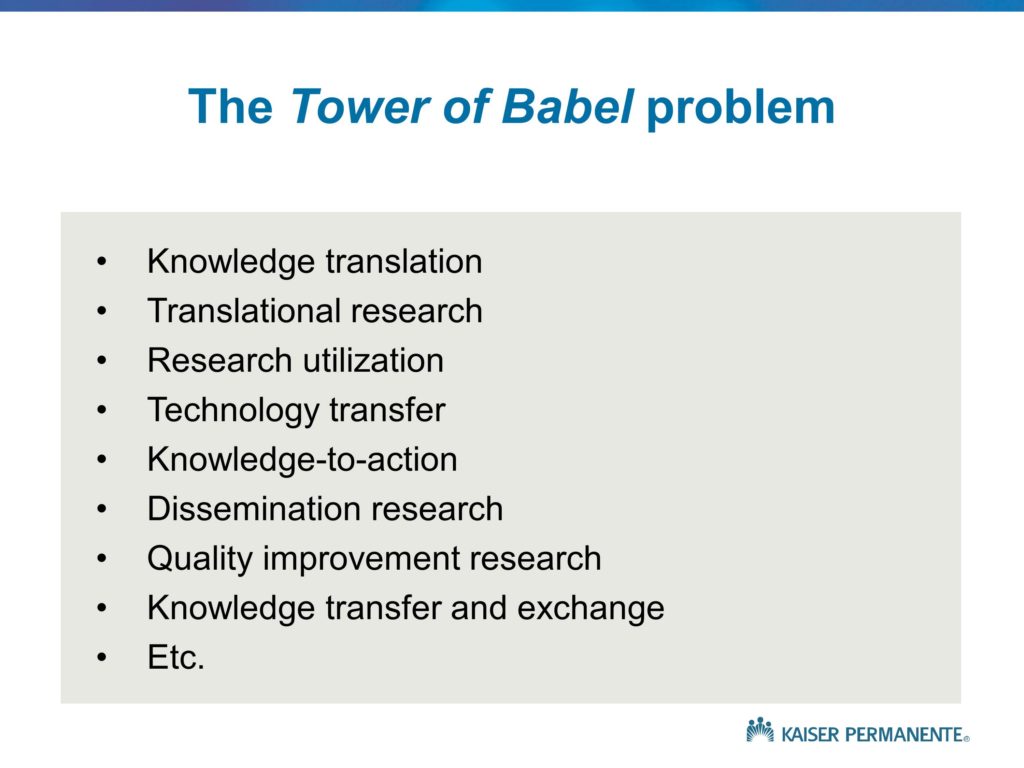

I’ll also point out that there are a number of different terms for implementation programs or strategies, quality improvement programs, and practice change programs. I tend to use them interchangeably, and I’ll actually talk a little about the so-called Tower of Babel problem as well.

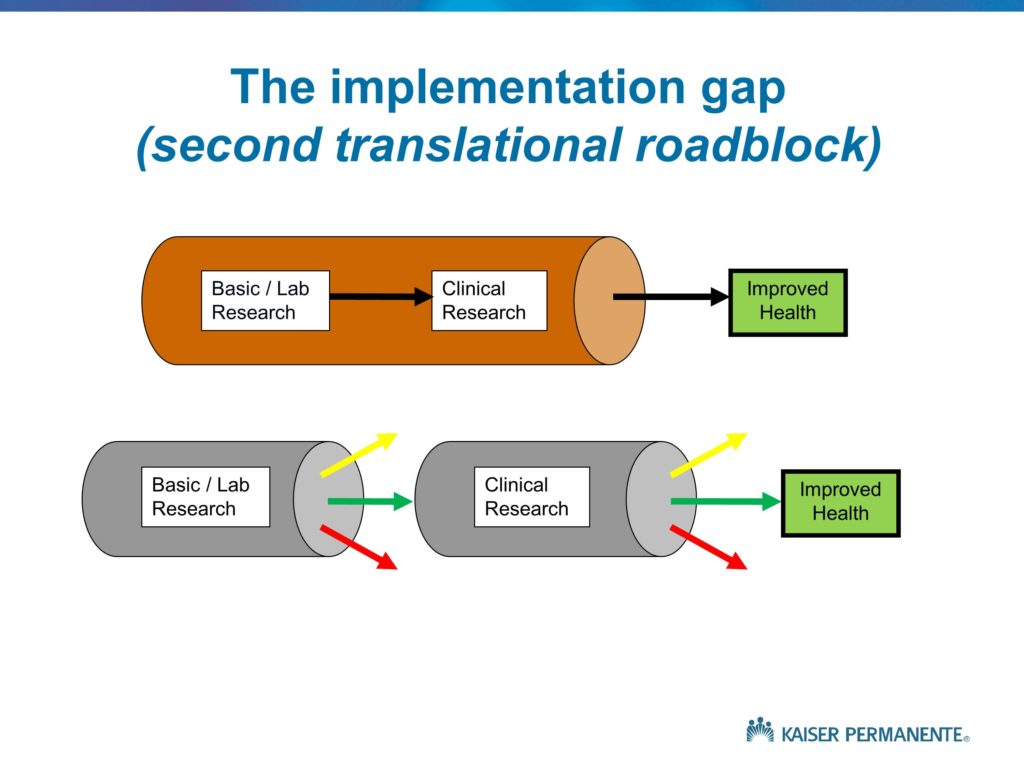

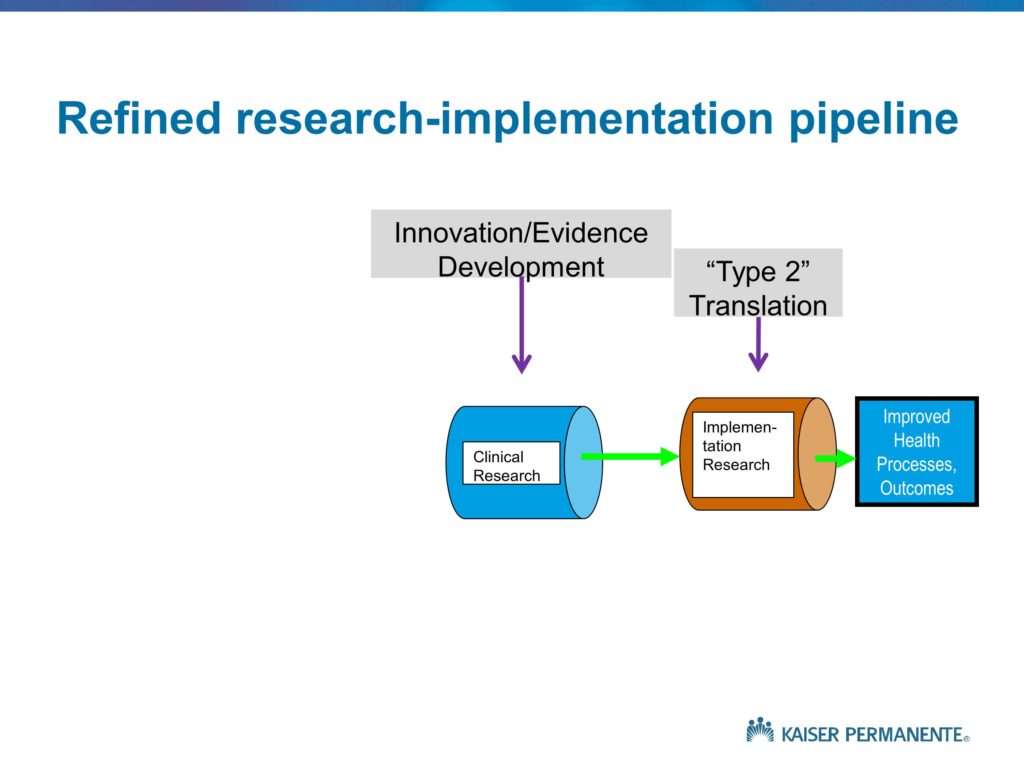

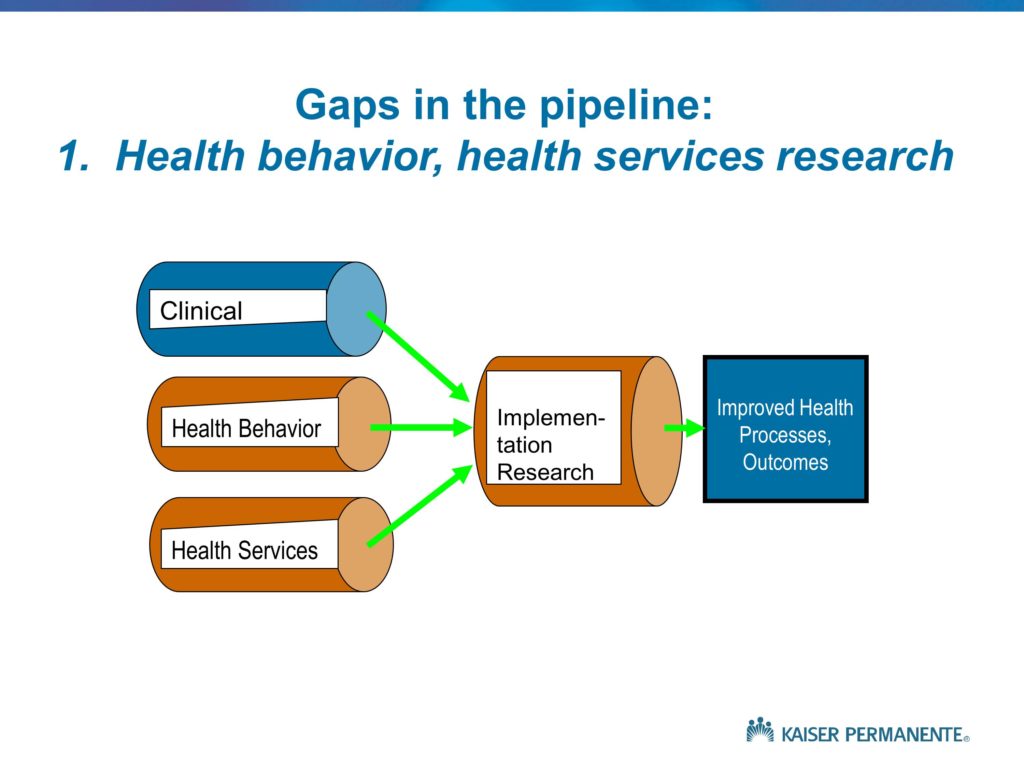

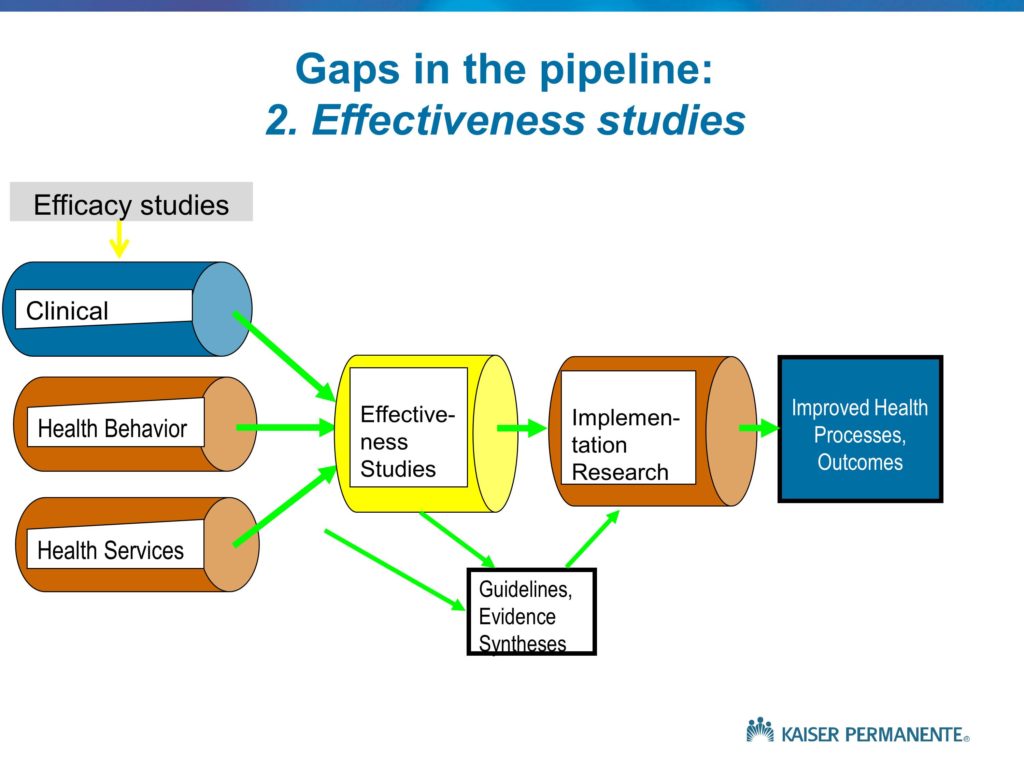

Let me go through a set of slides, again, that provide a number of slightly different ways of thinking about what implementation research is all about. This is a set of simplified diagrams that really derive from some work of the Institute of Medicine Clinical Research Roundtable about 15 years ago, as well as the NIH Roadmap initiative, again in the medical-health field. They basically depict the distinction between what we would like to see in a well-functioning, efficient, effective health research program. That is, basic science and lab research that very quickly leads to clinical studies that attempt to translate those basic science findings and insights into effective clinical treatments. Some subset of those clinical studies do, in fact, find effectiveness of innovative clinical treatments. They’re published, they’re publicized, disseminated and we see the improvements in health outcomes.

As opposed to that idealized or preferred sequence of events, what we tend to see is depicted by the bottom set of arrows. The yellow arrows, if you can see those, depict the idea that some proportion of basic science findings, as well as clinical findings, in fact should not lead to subsequent follow-up because they don’t have any value. There is no reason to follow up. A treatment or therapeutic approach that we evaluate for which the harms exceed the benefits is one that should be published, should be well-known, but of course will not lead to improved health. So the yellow arrows are to be expected, they are appropriate.

It’s the red arrows we would like to eliminate: findings that are published and have some value, but sit on a shelf for some number of years before eventually being taken up and moved into the next phases.

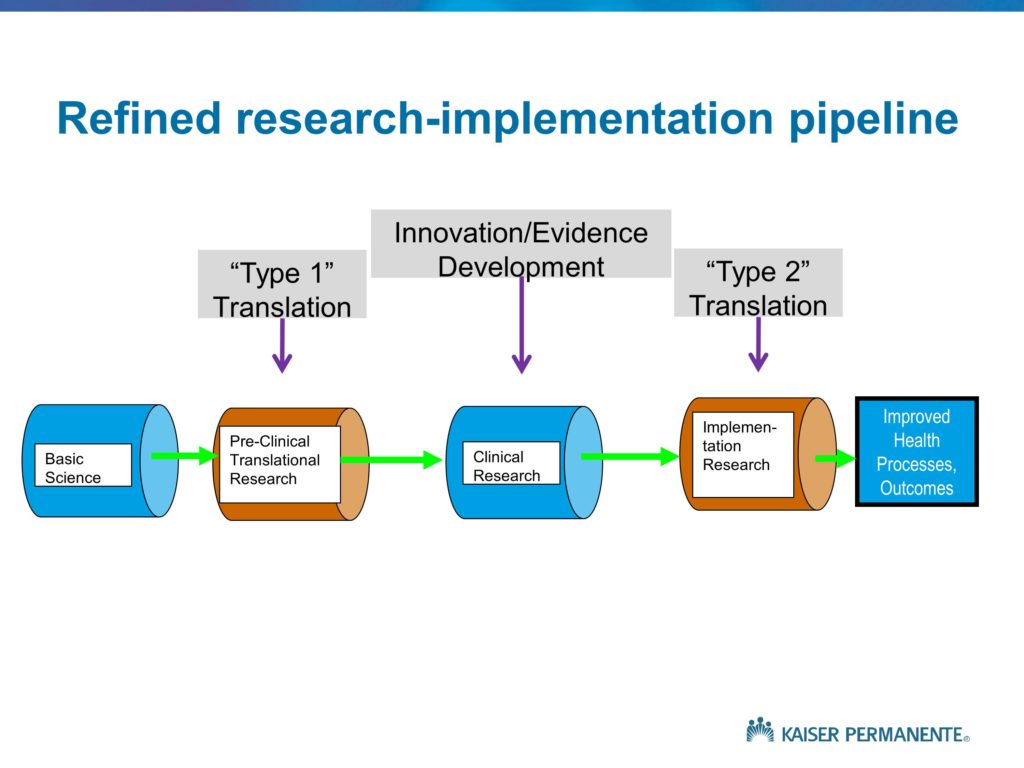

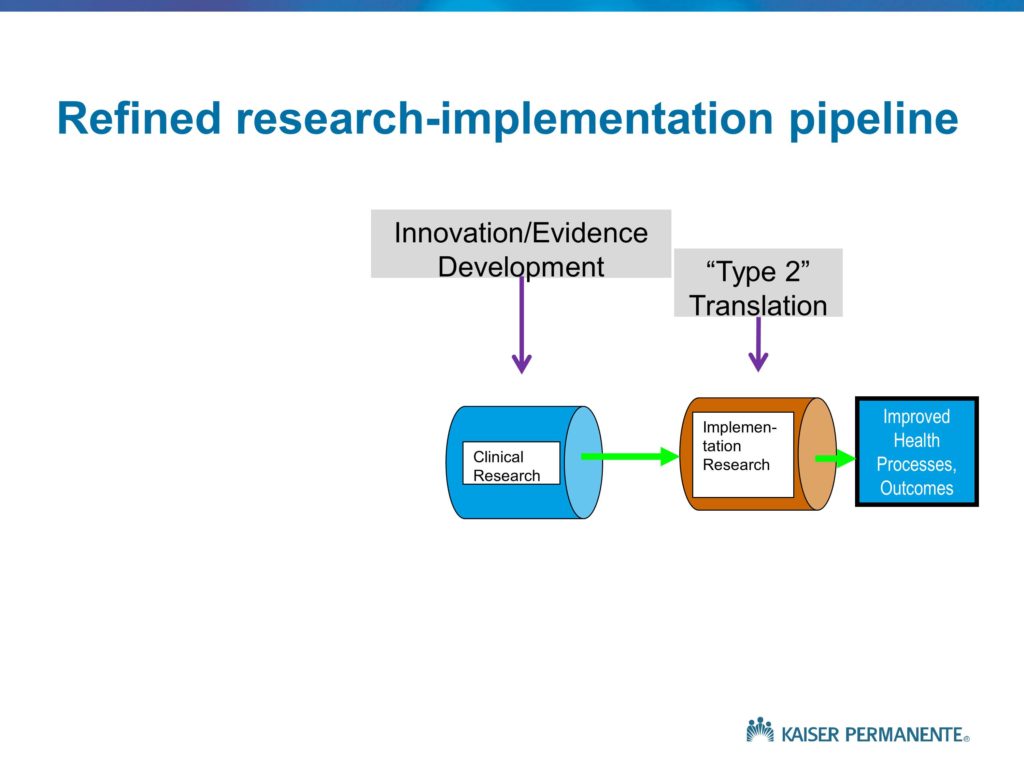

This is just another way of depicting these different activities. Many of you will know the NIH Roadmap initiative, particularly those of you at medical schools and university campuses with funding for a CTSA or CTSI through the NIH Clinical and Translational Science Award program. The bulk of that funding, and the bulk of the NIH investment, as is usually the case, focuses on Type 1 translation: translating basic science findings into effective clinical treatments. Some label this feeding the pipelines of the drug companies. I won’t spend any time down that path. But the point is, much of the NIH investment is in Type 1 translation.

Our interest, of course, is in Type 2 translation. Understanding the roadblocks that prevent a significant proportion of the innovative treatments and findings from clinical research moving into clinical practice or achieving their intended or hypothetical or potential benefits and impacts.

Gaps in the Pipeline

Quality Enhancement Research Initiative (QUERI) Framework

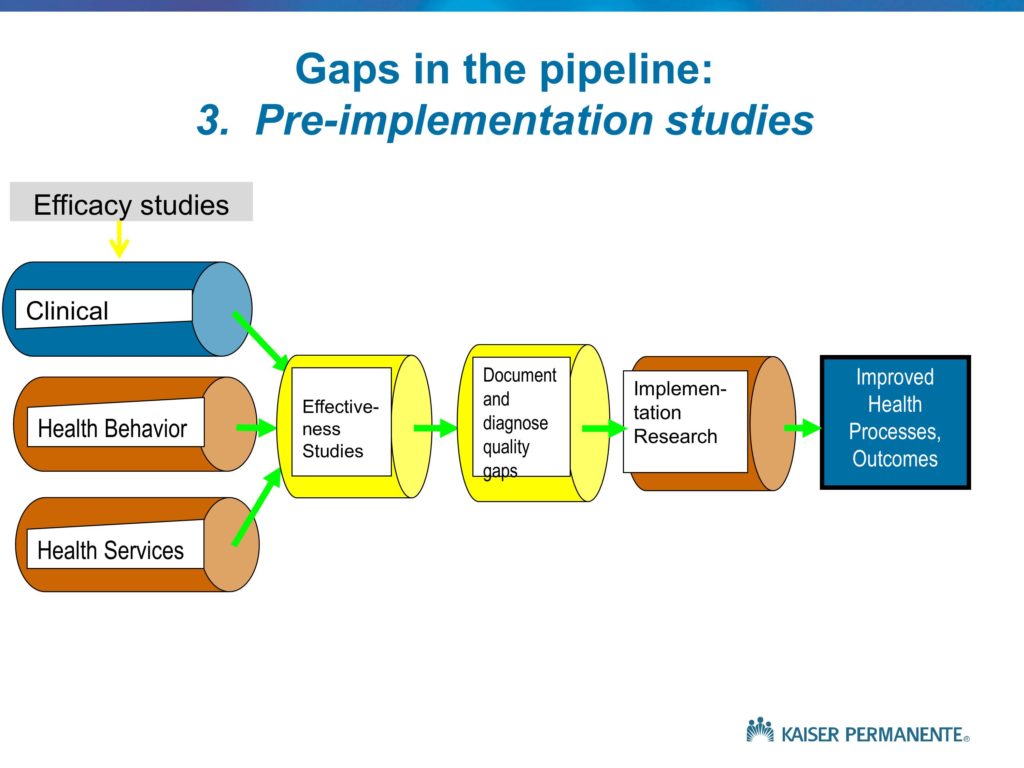

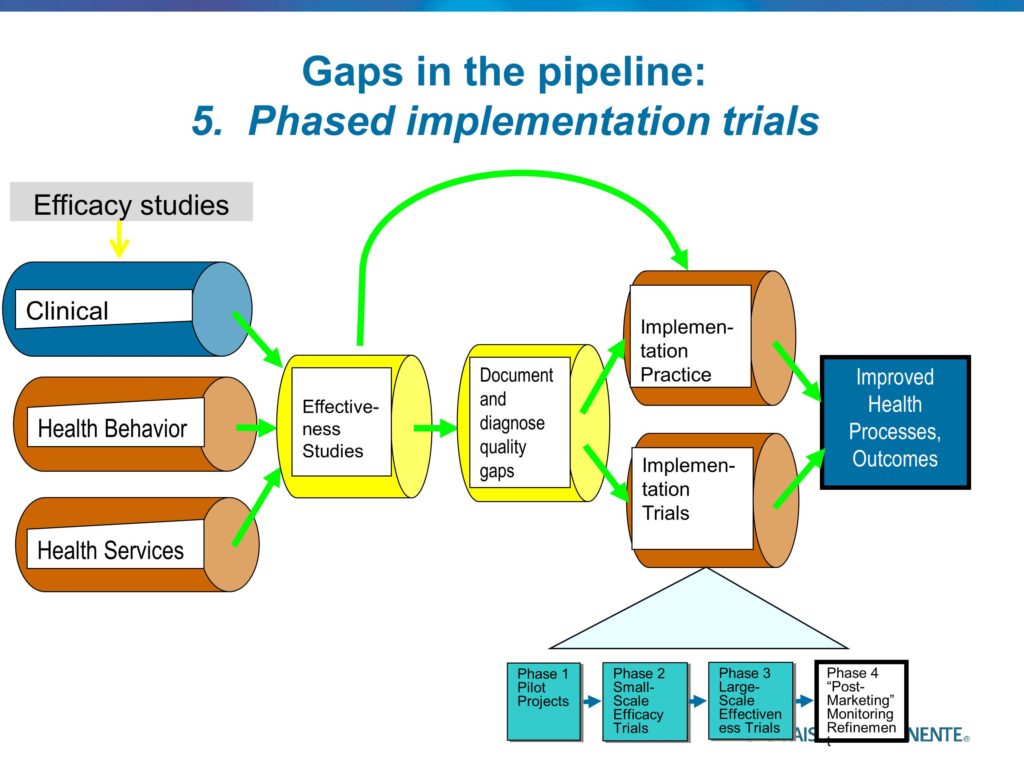

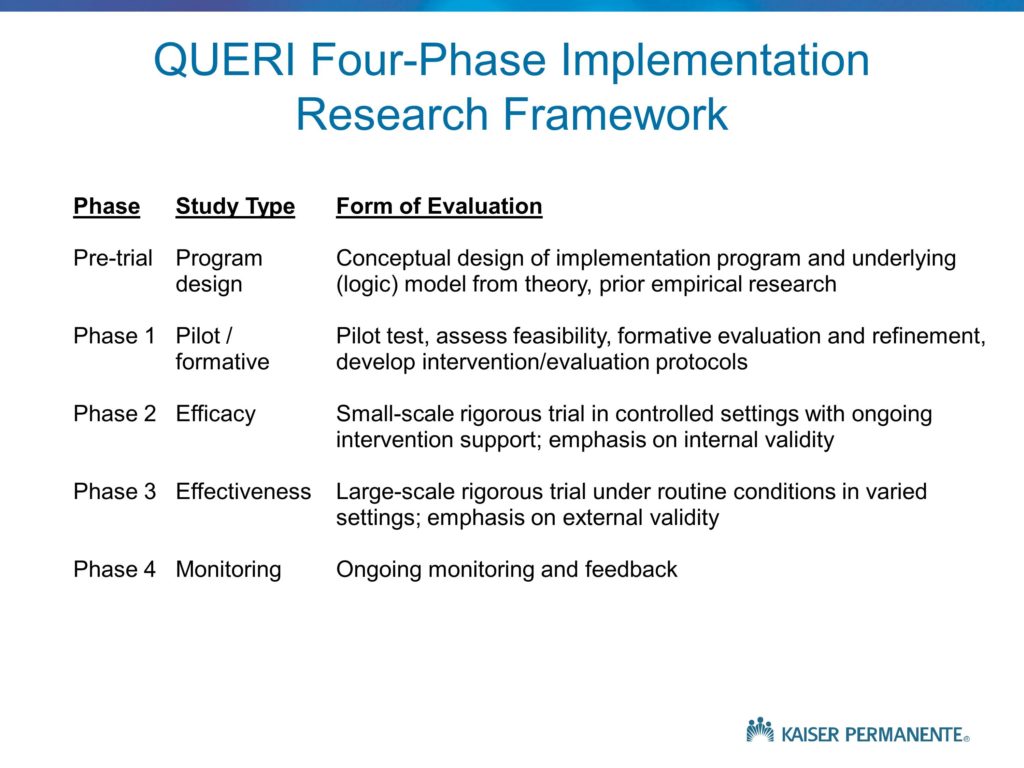

This, which is my last slide, is an attempt to describe the different phases in words. It points out, again, under Phase 1, the need for us to conduct single-site case studies. In the QUERI program, the Quality Enhancement Research Initiative, which we launched in 1998, there was a great deal of pressure to show impact and value very quickly.

As good medical researchers, we QUERI researchers quickly designed large, rigorous randomized trials of implementation strategies, and we very quickly learned important lessons about why those implementation strategies were not likely to be effective. We discovered some barriers, and some flaws in those implementation and practice change strategies, very quickly. But these were trials. In a trial you maintain fidelity and keep the features of the study fixed, so you can show intervention-control differences with high internal validity. There was reluctance to allow any of the modifications that, in many cases, were clearly needed and staring us in the face.

Single-site pilots, or two- or three-site pilots, allow us to learn those lessons quickly and cheaply, in two to three months. On the issue of funding, within VA we have the advantage of core funds provided to the QUERI centers, which allow them to fund these pilots internally without going through a six- to twelve-month grant process. It may be, as was suggested, that this is a potential role for the Foundation. But again, we need to continue to work on NIH and NSF and our other funding agencies to point out to them that moving immediately to a three- or five-year RCT costing $500,000 or $5 million, without first funding the pilot work, is inappropriate and a waste of research funds, as well as of the time and effort of the participating sites.

We need to begin with those Phase 1 pilots. We then need to move into the efficacy-oriented, small-scale trials that will tell us something about the likely or theoretical effectiveness of a given practice change strategy under best-case circumstances. In implementation studies funded with grant support, best-case circumstances often mean high levels of study team involvement at the local sites: providing technical assistance and support, and exhorting the staff to stick with the program and follow the protocol. Oftentimes the funds are also used to support new staff and to provide training and supervision. Often we have Hawthorne effects due to the presence of the research team and the measurement. As with any efficacy study, this is a method for evaluating whether, under best-case circumstances, a practice change strategy can be effective.

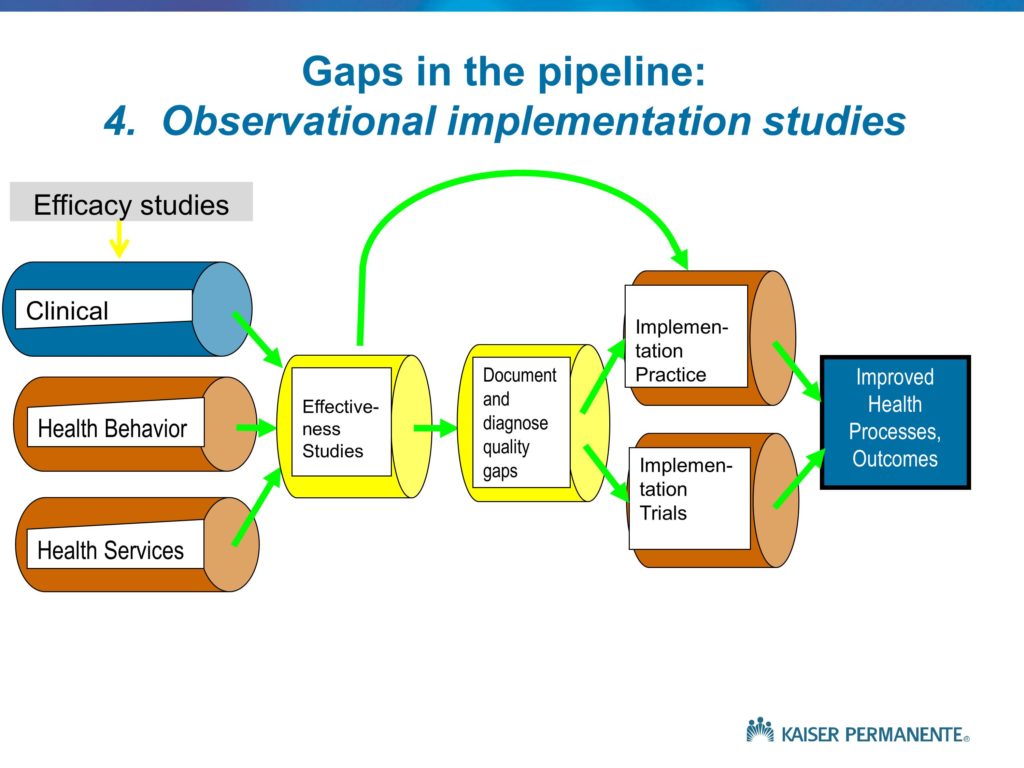

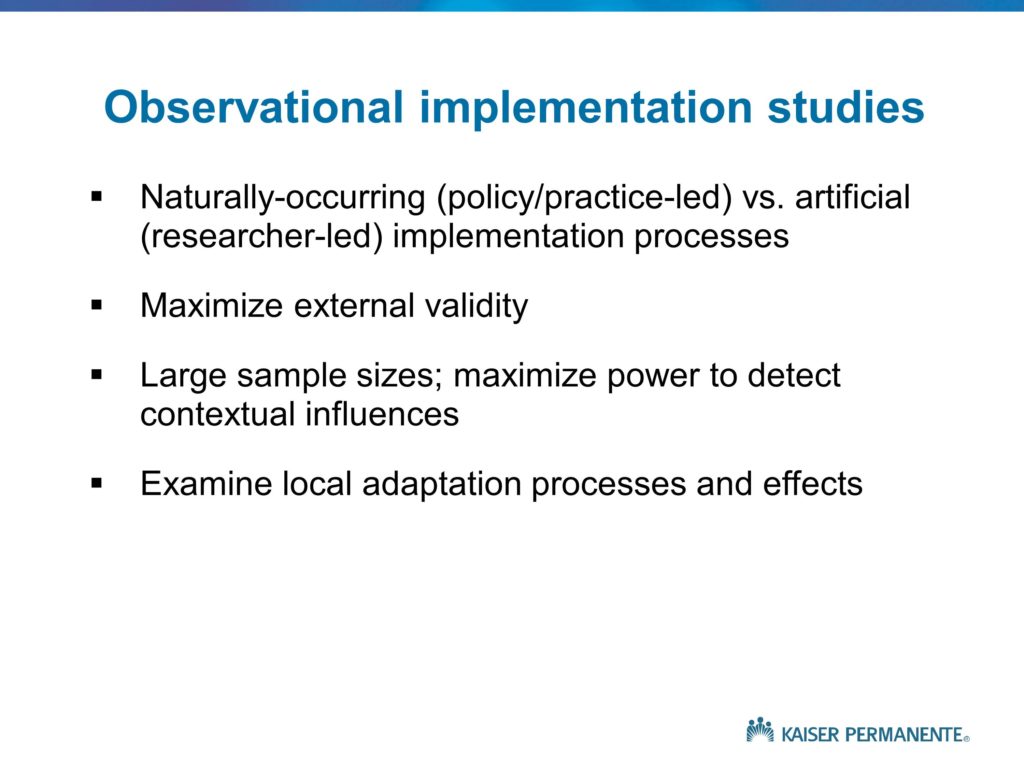

We often, in the implementation field, complete those studies, write them up, and essentially brag a bit about our success in improving quality. And then we walk away and go on to the next innovation, the next innovative practice change strategy. As with the clinical studies that we use to develop new evidence and innovations, the efficacy-oriented studies are not enough. We need to follow them with an effectiveness study, where the grant support does not provide additional resources and the study team is not onsite on a daily, weekly, or monthly basis; in other words, under the conditions of effectiveness research. We need to demonstrate, in a much larger, more heterogeneous, more representative sample of settings and circumstances, that this innovative practice change strategy can be effective. Then, and only then, can we turn that practice change strategy over, in the case of the VA, to VA headquarters, and encourage the national program office responsible for quality improvement, for example in HIV/AIDS care, to deploy that program nationally. At that point, as with any Phase 4 study, our role as researchers is to provide arm’s-length monitoring and help observe how the program is proceeding, whether it warrants refinement, and where the program seems to be working better than elsewhere, and why.

Again, this is another framework to guide the design and conduct of an integrated portfolio of implementation studies. Before concluding, I should say that these are idealized frameworks. Many of them are in fact not completely feasible, because working through every phase would take more years than we should be spending. I mentioned, but didn’t discuss, hybrid studies that combine elements of clinical effectiveness and implementation research. There are also hybrid studies that combine elements of Phase 1 pilots with Phase 2 work. These are also very linear, rational frameworks that don’t necessarily describe the way the world works so much as the way we might like it to work. I won’t spend any time on how it actually does work; I know that will be covered to some extent in the subsequent presentations. It is also an area that needs more activity, research, and contributions from all of you.

Again, this is meant to answer some of the key questions: What is implementation science, how does it differ from other forms of research, and how do we go about designing and conducting a portfolio of implementation studies? With that, let me stop and open the floor for questions.

Questions and Discussion

Audience Question

We know a lot about the basic physiology of speech production, and we are developing a lot of strategies to help people sound better. It’s that implementation part: We get them sounding good in treatment and say, “Go out and communicate well.” We know so little about how people with communication disorders function in the real world. You used the term root cause analysis. Would you talk a little bit more about what you mean by root cause analysis and how we might implement that in our situation?

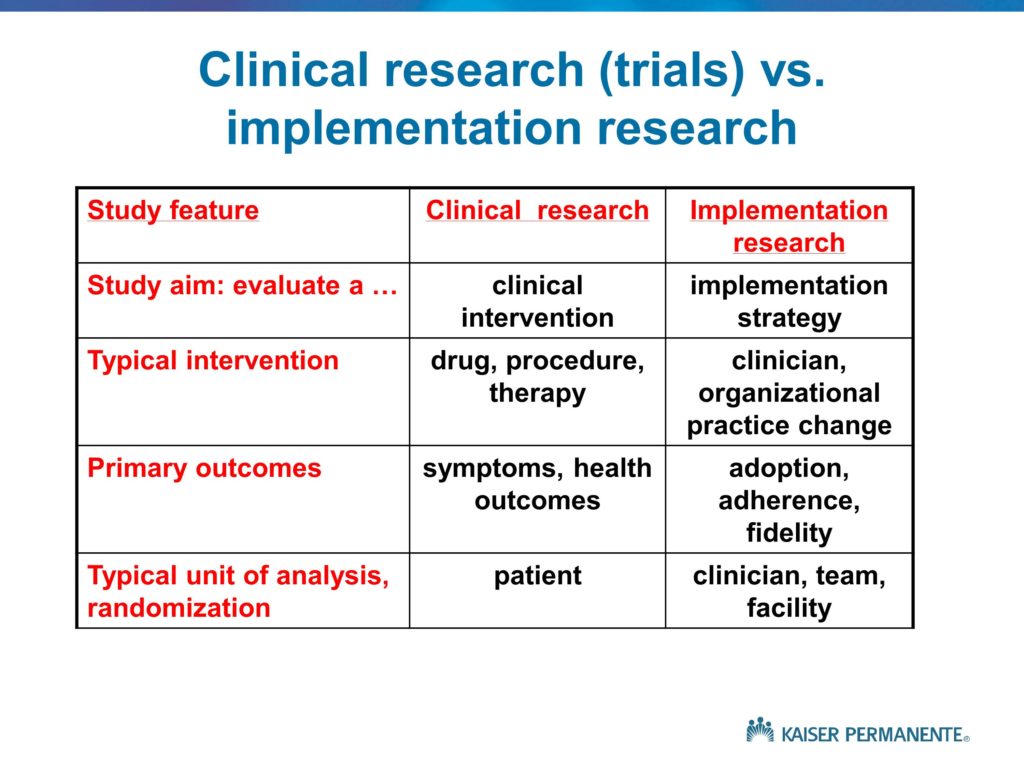

Brian Mittman:

A couple of thoughts. One is that, if I’m understanding you correctly, I would characterize the issues and problems you’re describing as falling within the domain of clinical research rather than implementation research. If the clinical strategies used to support the clients, the patients, and to improve their performance don’t have lasting effects, I would see that as a flaw in what I’ve labeled the clinical intervention.

There probably is a role for root cause analysis in trying to understand why those clinical treatments don’t have sustained effects. But implementation research would focus on ensuring that therapists are delivering those effective clinical strategies. Root cause analysis in the implementation world is all about asking what it is about the therapists’ training, their attitudes toward evidence-based practice, or the constraints they deal with in their daily work lives (lack of time, lack of support, lack of skill) that prevents them from delivering those therapies with fidelity. It does sound to me like the problem here lies in the limited effectiveness of the clinical treatments.

Audience Comment:

Let me just give you an example. I really do think it’s in the implementation. When we ask people what they did and didn’t like about our treatment, they say, “I was discharged at X amount of time because my funding ran out.” When you go to clinicians, they say, “I stopped treatment not because it was not needed, but because I didn’t have the funding.” That’s not a part of our treatment; that’s a part of the implementation policy.

Brian Mittman:

Sure, you’re right. And in some ways it’s a combination, because a treatment that is efficacious under best-case circumstances but is infeasible is not likely to be effective. Its effectiveness, or limited effectiveness, reflects a combination of features of the treatment that are not scalable and features of the implementation process and broader context that don’t allow clinicians to provide the proper training and use the treatment the way it was designed and intended to be used. Again, to get back to your original question, I think root cause analysis is appropriate for both of those. It is a matter of understanding: Is it a matter of comfort on the part of the therapist? Is it a matter of regulatory or fiscal policies not being supportive? Do we lack the kind of equipment that we need? And so on. Those are the kinds of potential causes of poor fidelity and poor implementation that we need to understand.

Much of the early work in the implementation field in medicine focused on better strategies for doing medical education. That assumes that the problem is education. Oftentimes clinicians know exactly what to do, but they don’t have the time or the staff support. Patients are resistant. There are a number of other barriers. No amount of continuing education will overcome those barriers, so we’re solving the wrong problem. That’s what root cause analysis, or diagnostic work, is all about: identifying the causes so we can target the solutions appropriately.

Audience Comment:

And the methods are interviews? Or going out to stakeholders?

Brian Mittman:

Keep going.

Audience Comment:

Or looking at databases?

Brian Mittman:

Keep going. Yes. All of the above.

Audience Question

Could you say a little bit more about the blurriness, or how to handle the blurriness, around establishing efficacy? Do you really need to have established efficacy before you study implementation? And how can we contend with the fact that it isn’t unidirectional, that implementation also affects efficacy? I may be pushing you to talk a little bit about the hybrid models.

Brian Mittman:

I think the hybrids relate to both of the previous two questions. One of the papers I will circulate lays out the hybrid concepts. The idea is that this linear process would take far too long. We often have enough evidence that something is likely to be effective, and also enough concern about how its effectiveness depends on the implementation strategy, that we really need to study both at once. First, there are instances where we are conducting an effectiveness study and begin to collect implementation-related data. If we are evaluating an innovative treatment in a large, diverse, representative sample of sites without providing the kinds of extra fidelity support that we do in an efficacy study, that’s our best opportunity to begin to understand something about the acceptance and likely use of that clinical treatment, and the barriers to its appropriate use. So we begin to gather implementation-related data.

But when we are focusing on the implementation strategy, we need to continue to evaluate the clinical effectiveness and the clinical outcomes. If we have a truly evidence-based practice, and we know from a large body of literature that delivering that practice will lead to better outcomes, we can focus only on implementation. We know that if we achieve increased utilization of that practice, those beneficial health outcomes will follow.

But oftentimes that evidence base for the clinical questions is not sufficiently well-established. There are interaction effects between clinical efficacy and effectiveness and implementation and fidelity, and we need to be doing both simultaneously. That’s what the hybrid frameworks are all about. Studying the success and effectiveness of a practice change strategy to increase adoption, to increase fidelity, and continuing to measure clinical effectiveness so that we can determine whether we see the benefits as this practice is deployed in real circumstances, with different types of implementation support or practice change strategies.

So, our work on the clinical effectiveness side never ends. And again, distinguishing between these different aims and understanding their implications for sampling and measurement and analysis and so on is one of the key challenges, and one of the goals of the paper I was involved in drafting with some VA colleagues.

Audience Question

We’ve been using a program for about 14 years, adopted from Australia, called the Lidcombe program. There are many studies by the group in Australia, and by us as well, showing that it’s efficacious. Now it’s important for us to move into the effectiveness domain. But after reading Everett Rogers, I’m highly aware that the context is really important, that the culture is really important. I wondered if you had any tips about how to assess the resistance, because there is a lot of resistance to this program, particularly in the United States. I’m thinking, okay, this is a cultural issue. Is it the culture of the speech-language pathologists working with preschoolers, which has been influenced by the belief that you shouldn’t talk about stuttering to a preschooler? Or is it a problem of the setting? In other words, is it hard for SLPs to make it work in their setting? Do you have any tips?

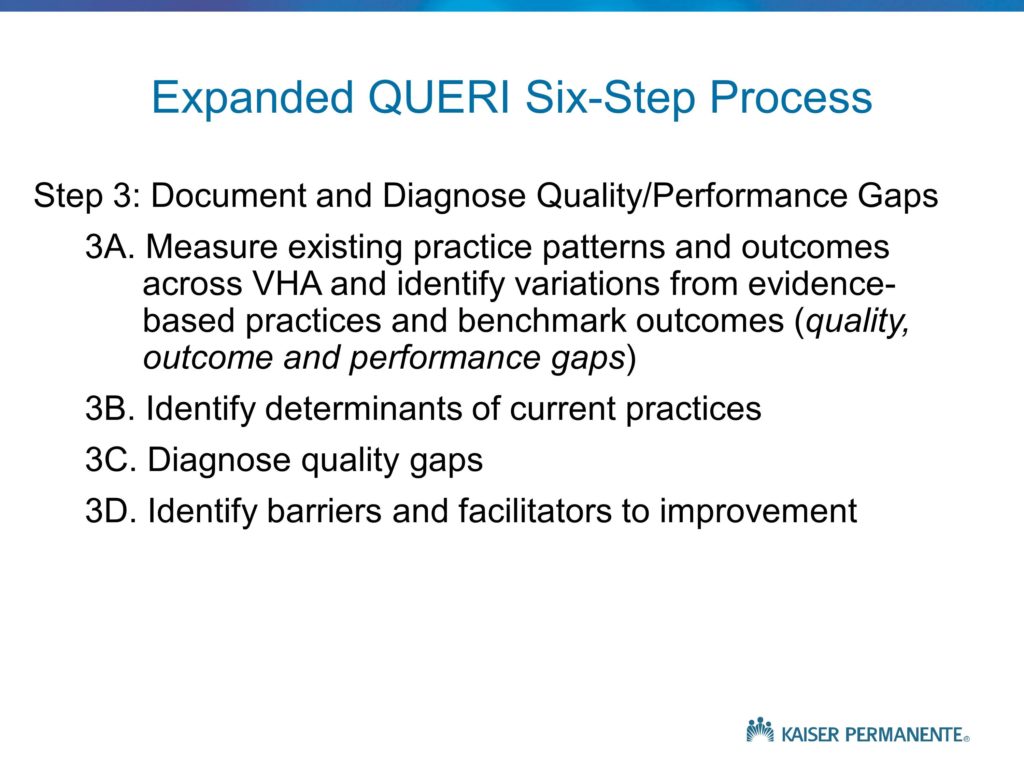

Brian Mittman:

Again, I think the answer is probably all of the above. If you recall the QUERI step 3 slide, where I showed the distinct steps in the diagnostic process, one of those steps is a pre-implementation assessment of barriers and facilitators. The idea is that we spend some time using the methods that were mentioned (interviews, observation, and so on) to try to understand and make some educated guesses about the response to an effort to implement this therapeutic approach, and to identify some of the key barriers and see what we can do to overcome them. But ultimately, we won’t identify those barriers until we actually get out and begin to implement the program. Was it Kurt Lewin who talked about the need to attempt to change behavior as a way of understanding barriers, rather than assuming a priori that we can correctly project them?

There are some other frameworks in the field that focus on the multi-level nature of influences, barriers, constraints to practice change, and talk about the different kinds of strategies that would be needed to address the individual clinician factors, the setting factors, the broader social-cultural, regulatory, and economic factors. Typically the answer to the question, “What is impeding implementation?” is: All of the above. It’s all of these factors.

And our implementation studies tend to focus on only one or two of them. If we only focus on education and knowledge, again, we’re doing nothing about that broader spectrum of factors. The barriers and influences occur pretty much everywhere we look. It’s a much more complicated set of problems, and we need to be thinking about and using another set of frameworks within the implementation science field in health, ones that identify contextual factors all the way from the regulatory and broadly social-cultural level down to front-line, point-of-care delivery factors. And we need to think about all of those as potential barriers to practice change, as well as potential targets for our implementation and practice change efforts.

Audience Question

The rapid and frequent changes in healthcare reimbursement have played havoc with my treatment efficacy research. For example, right now I have a person with severe apraxia of speech who is turning 65 in a month. As soon as she turns 65, the therapy cap and that sort of thing will seriously affect her treatment. Well, we’re changing our treatment to do more functional communication, but what I really want to do is continue my study to see whether the treatment I’ve devised is going to help her communicate effectively. I know you can’t solve that problem, but I want to bring it up as an issue for discussion. I’m anxious to hear more about the hybrid studies, and any advice people can give us when we have to interrupt our nicely designed single-subject designs midway to meet the patient’s real needs related to reimbursement.

Brian Mittman:

I can offer one quick response, and then I’m sure Dennis has more to add. My response is that we need to be thinking about doing both. By both I mean trying to develop and evaluate therapeutic approaches that we believe will be effective and feasible given the current socio-economic and regulatory environment, while at the same time developing, studying, and evaluating therapeutic approaches even though we know that right now they’re not likely to be feasible, sustainable, or scalable.

The reason is that when we demonstrate significantly greater effectiveness, that’s the evidence we need to lobby for the changes. I think the important thing is to recognize this from day one. There’s an important concept in the field, and someone may talk about it later, of designing for dissemination. It probably should be designing for implementation, but that doesn’t sound quite as good. We should think from the very beginning about designing for feasibility, but we shouldn’t limit ourselves to the kinds of approaches that are likely to be feasible. We need to be more innovative at the same time.

Dennis Embry:

One thing that came to mind is that I think it’s really important to do the eco-anthropological type of investigation before we go do one of these things, because one of the things you find out about is all these little stupid barriers.

I loved your idea about looking at the regulations. I think concurrently going to the end point and observing a whole bunch of people just having that therapeutic interaction tells you a whole lot about what are the contingencies. I’m reminded, early on in behavioral analysis people talked about doing an eco-behavioral assessment before you actually designed an intervention to pull out some of these pieces.

The other thing I think is often forgotten is Trevor Stokes and Don Baer’s paper on the technology of generalization. So many people don’t think about generalization features in the design of their study. Your presentation convinced me that something I decided to start doing a long time ago was right: whenever I’m in Phase 1, I just run it as a mini-effectiveness trial rather than an efficacy trial. If I do the efficacy thing and it works, but it won’t work in the real world, it’s kind of dead. It might be good for a publication, but I’m learning we have to think about effectiveness right off the bat.

Brian Mittman:

And I think that is the key point: to think about these issues. There aren’t necessarily right or wrong answers, but you need to be aware of whether you are studying clinical effectiveness, implementation, or both; to understand whether it is an efficacy or an effectiveness study; and to think down the line about what the next step is. What follows this study? It’s not enough for us to complete our work, publish it, and say, my job is done, someone else will come along. That’s what leads to these roadblocks and these long delays in progress. That’s what leads to the criticism that too much research benefits academics’ careers but doesn’t have much value or benefit for society, and that’s not why we’re here.

Audience Question

I really love the sort of diagnostic paradigm you’re putting on this problem, and it makes me feel like this is our business. We are supposed to be doing diagnostic root cause analyses before we do our clinical interventions, and we need to do that here, as well, in research. I work in the area of swallowing disorders and one of the interventions that’s attracting a lot of attention is the idea that people need to clean patients’ mouths to deal with bacteria formation. If you go to the literature there are, first of all, arguments about who is supposed to be cleaning people’s mouths — but it’s really the most depressing literature I’ve ever discovered about how hopeless in-service education is. I’m just wondering whether you have a provocative paradigm shift to offer about in-service education because I think we’re sort of perpetuating the same problem.

Brian Mittman:

To me the phrase that captures this concept is “necessary but not sufficient conditions.” Education is almost always necessary, but it is not sufficient. We need to think about necessary but not sufficient conditions for practice change, and about multi-level, multi-component practice change interventions and programs. Actually, in addition to my dislike of the T-word, I dislike the I-word for practice change. These are not interventions; they are implementation programs or campaigns that are multi-faceted and have multiple components, and the clinical intervention is what we try to implement using an implementation strategy, program, or campaign. The needs include multiple elements that we sometimes mix and match, sometimes within a single study at different sites, because in some settings there is a leadership problem or a culture problem, and in others there is not. It makes for a very complicated but very interesting set of challenges. We need to acknowledge that complexity, rather than ignore it and hope that through the magic of randomization all these factors will disappear.

The main effect of any given component of an intervention — education and others — is very, very weak. And without combining a set of intervention components within a campaign or program, we’re not likely to see any practice change, let alone sustainable, widespread practice change.