The following is a transcript of the presentation video, edited for clarity.

Defining SUSTAIN(ability) and Historical Assumptions

When I think about defining sustainability, I go to Merriam-Webster, which offers a lot of different definitions. Some of them, I think, embody what is often thought of as sustainability in this space more than others. There’s a lot of focus on how we keep something up and how we prolong its delivery: how we make sure it bears up under whatever stress to the system might interfere, and how it supports what is true, legal, and just. The idea that something sustains over time means it stays as valid as it was before.

There’s also the idea that sustaining is confirming: it enables us to once again reify that the evidence base we developed previously still holds.

There’s also a cousin, the RE-AIM model, which I think you’ve already heard about and will hear more about, where the M is “maintenance.” When we think about maintaining, Merriam-Webster again defines it as “keeping it in an existing state.” I think Larry did a very nice job, right from the get-go, of talking about the tension between how we deal with something as it was originally designed and its likely adaptations. That whole fidelity/adaptation challenge is at the center of our thinking about sustainability.

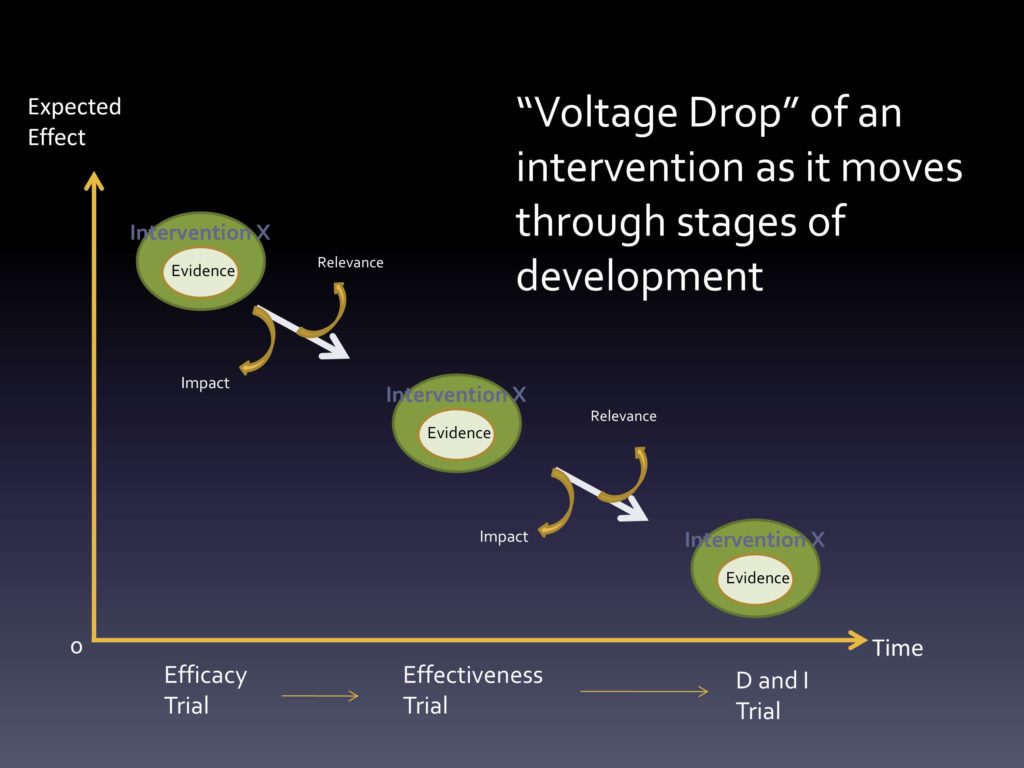

The challenge is that if we define it in a particular way, it may limit our ability to arrive at what we might see as an optimal solution, because if we fix certain parts in place, we may interfere with long-term success. That’s where I’m starting from.

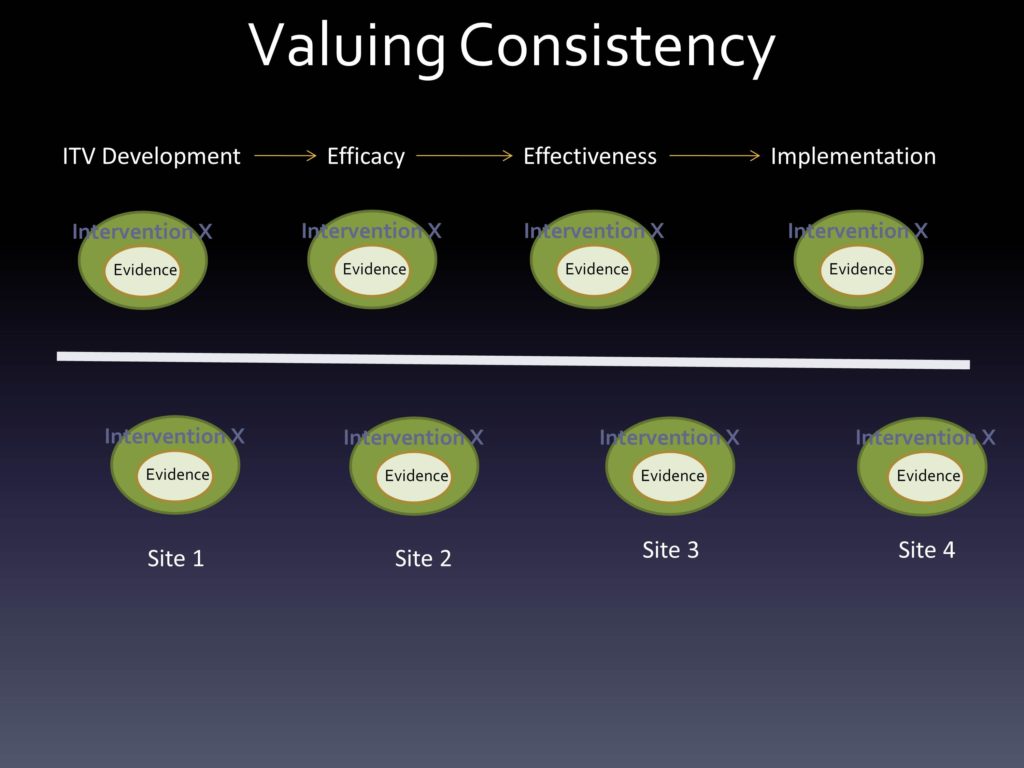

The historical assumptions that I think carry over from some of these ways of thinking about sustainability are that interventions are developed, the evidence is gathered, and then they are basically “frozen.” We’re trying to say, “Don’t mess with that. We’ve already established it.”

Fidelity to the intervention is often assumed to be optimal. You have a fidelity scale, and we at least assume that the higher up on that scale you are, the better the outcomes people are getting.

We see context as a threat to fidelity, introducing “noise.” And who wants noise, right? You want that pristine silence, not a bunch of really happy six-year-olds running around screaming all the time.

And sustainability is often seen as the enemy of change. We’re trying to keep something as it was designed as much as possible.

There’s also the practice of picking a particular time point, which may be arbitrary, at which something goes from being implemented to suddenly being in the sustainability phase. We’re there, it’s frozen, we’ve passed that point, and we’re good to go.

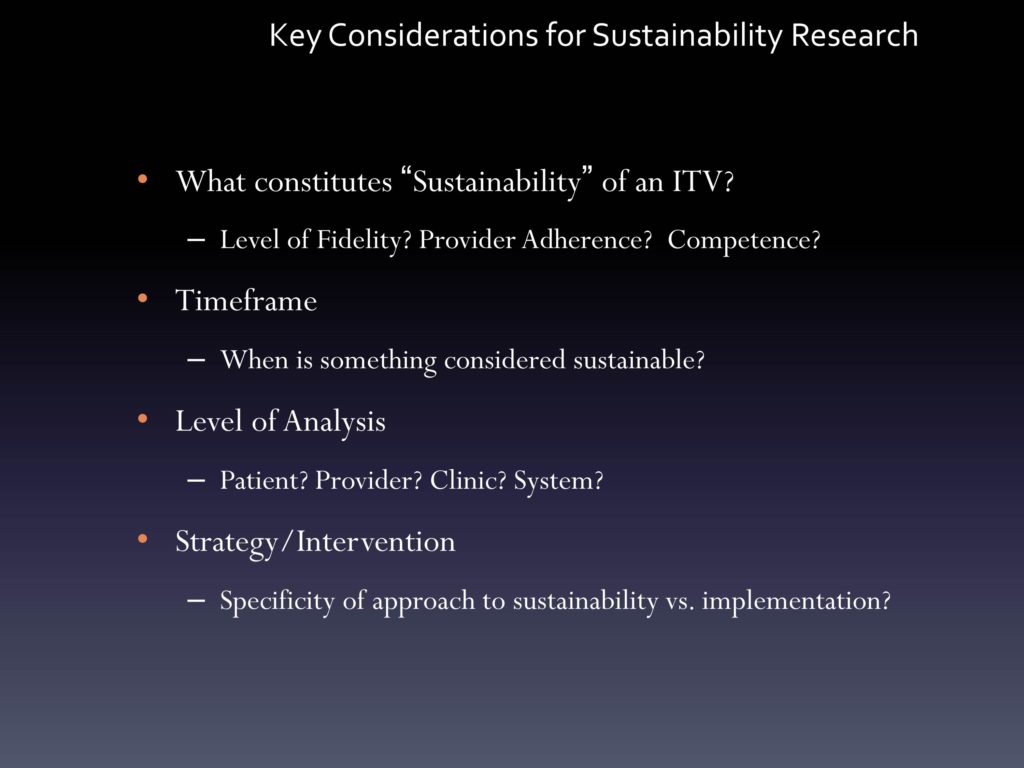

Key Considerations for Sustainability Research

When we think about some of the sustainability research questions that come up, in some cases they don’t work so well if we take on all of those historical assumptions. If we start to ask “What constitutes sustainability of an intervention?”, we may have to struggle with “What is good enough, if fidelity is how you define sustainability?” Is it also provider adherence to a particular protocol? Is it the competence with which you deliver an intervention?

We have to challenge ourselves to think about timeframe, and really ask when something is considered sustainable.

We need to think of sustainability at different levels of analysis: sustainability for the patient, continuing to receive an intervention as it was designed; for the provider, continuing to deliver it at the clinic; or for the broader system, continuing to offer it.

Then, within implementation, we think a lot about how far we can get toward sustainability if we’re simply extending implementation outward. Or do we need specific approaches that focus on sustainability as the primary aim, rather than treating it as implementation further down the road?

These are challenges that we all have, and they are challenges we want the field to continue advising us on.

Signs of Progress: A Conference and Recent Writings

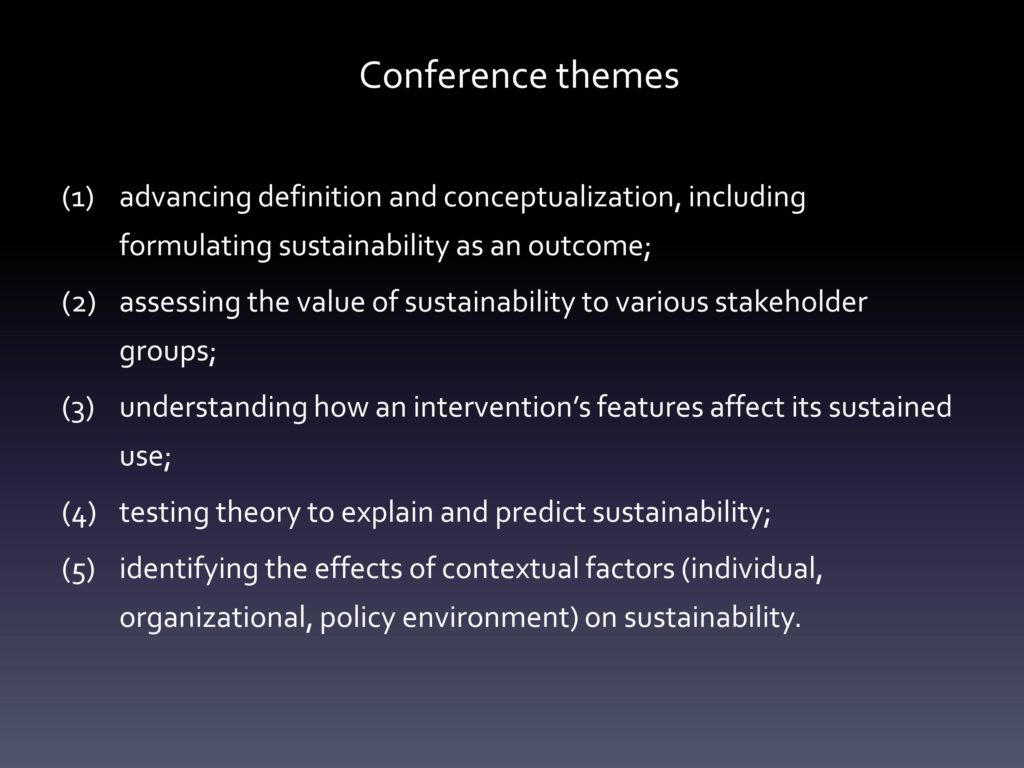

So there were a number of conference themes, which I think represent where we are as a field and, to some degree, the horizon.

- Advancing the definition and conceptualization beyond a particular dictionary definition, to deal with all the nuances and challenges of the field.
- Thinking about how sustainability is valued in different ways by different stakeholder groups, many of which were represented during those couple of days.
- Thinking about an intervention’s features: how they ultimately affect whether it is sustained, and whether we can inform better intervention design to that end.
- Thinking a lot about what theories are available, or should be created, that specifically explain, and ideally help us predict, sustainability.
- Thinking in a more expanded way about contextual factors at different levels of the system that are going to impact sustainability.

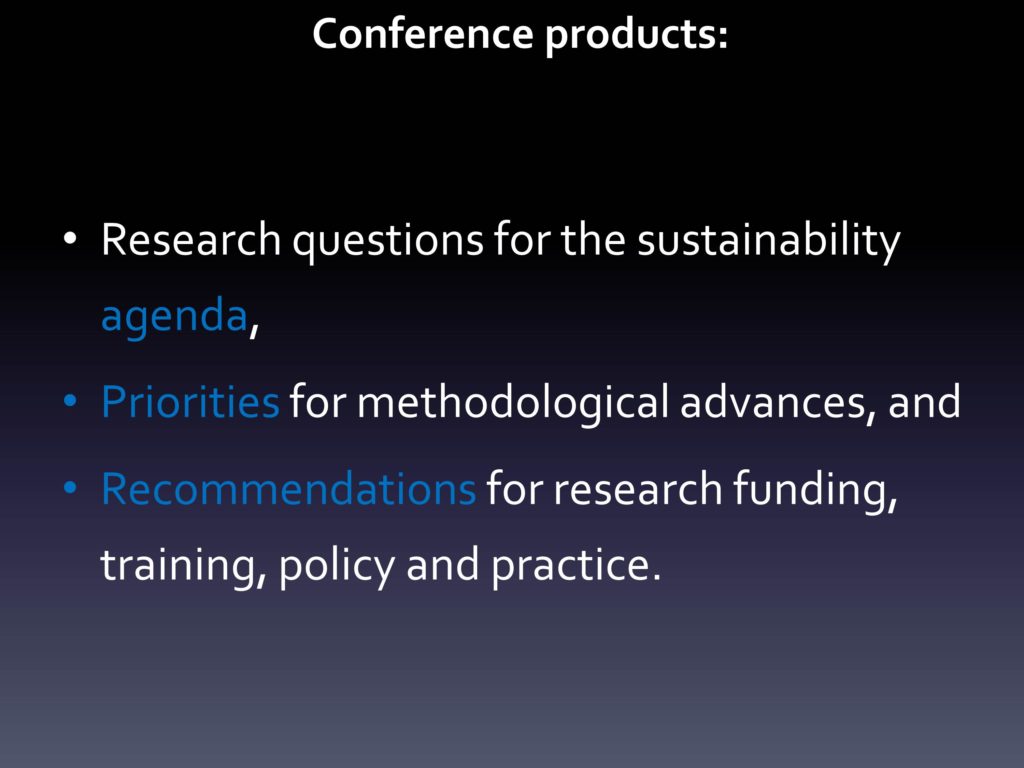

There were some particular research questions, and I would submit that they have not been fully answered. They are things we would love to see people investigate a little more, at least from the NIH standpoint.

- What is the value of sustainability? How can we articulate it, not only in terms of reach but in terms of potential ongoing health benefits, maybe not just for the individual but for populations over generations?
- How do different interventions differ in sustainability, again depending on how they are designed or how they are implemented?
- The interplay between sustainability and fidelity, or a three-way interplay, I guess, if you throw in adaptation. What factors trigger adaptation? What things within the system might make it harder to sustain something in a particular form?
- As you heard a couple of times, the review we did of implementation frameworks and models (the 109 that became 61 that hopefully became a helpful resource) didn’t specifically focus on sustainability. So how can we get there for sustainability?
- Much more research: observational as well as prospective studies were called for, to try to identify how contextual factors affect sustainability.

We’ve also had a number of people who I think have been expanding the terrain of how we think about sustainability.

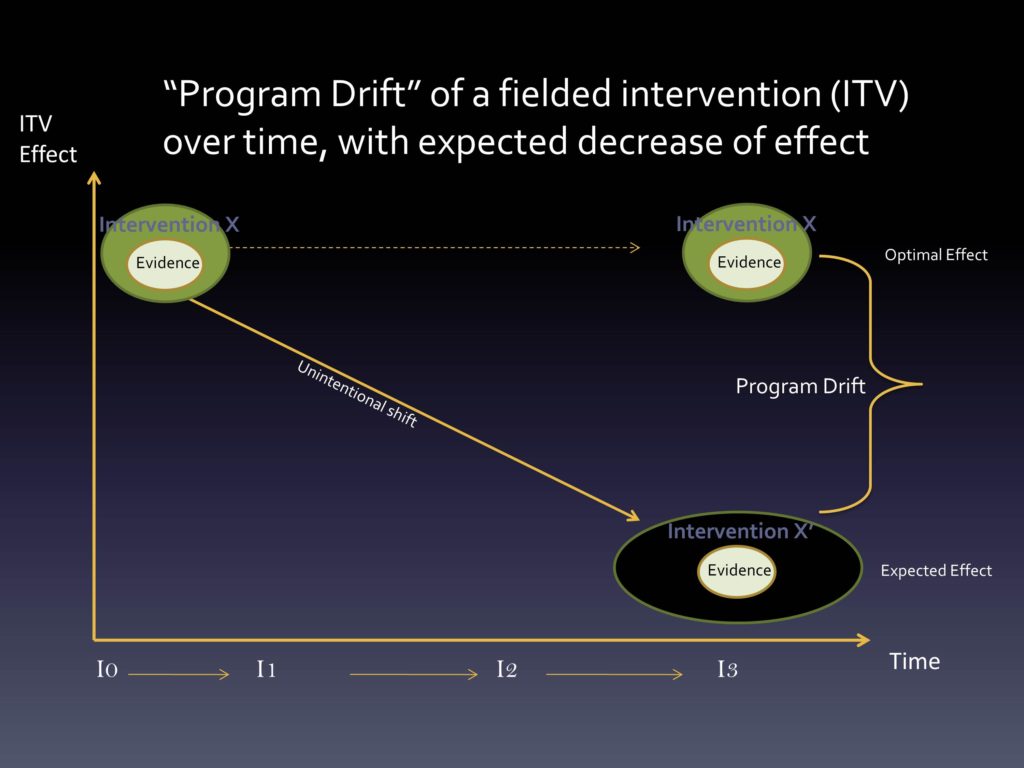

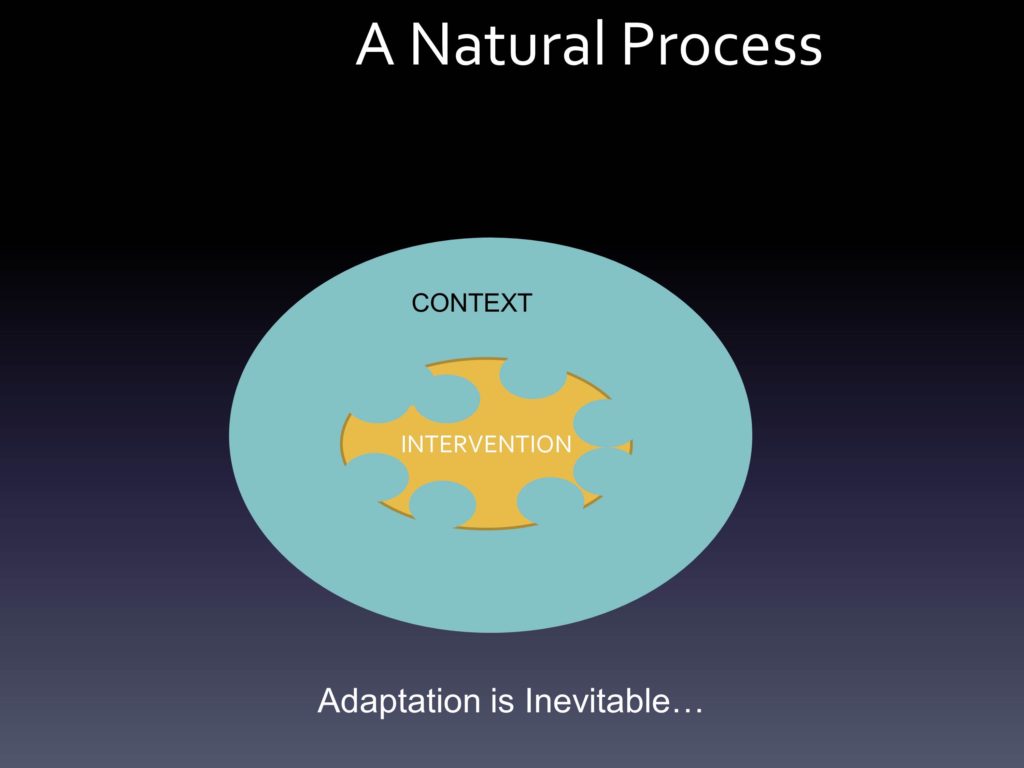

Mary Ann Scheirer and Jim Dearing have been two of the key people saying that it’s not enough to assume something in its original form can likely be sustained forever. Adaptation, to some degree, is essential to sustainability.

The organizational assessment, or the context, or the fit of that intervention is going to be key.

Some folks have described a very important process of looking at the long-term success of an intervention, occurring somewhere between initial implementation and the broader sustainability phase.

There’s also the idea, which Greg mentioned, that outcomes assessment and feedback can help inform sustainability, or even, as was discussed before, whether things have outlived their usefulness.

And they also argue that capacity building is necessary for success; the memo-driven or publication-driven solution probably won’t do so well.

Reasons for Optimism

I think that today we have a lot of reason for optimism. There are more and more opportunities to look at sustainability, because people who have very systematically tried to understand the initial implementation phase can now follow up, the same way prevention trialists followed their populations to see whether the original intervention ultimately led to better outcomes down the road. Here, likewise, that initial implementation effort might enable us to look down the road at what’s going on.

We’re developing measures: the Stages of Implementation Completion, and the sustainability planning tool that Doug Luke, also at Wash U, has been pulling together, which may be a pragmatic tool for folks planning for sustainability.

We have improved data, and technological advances have improved our ability to track more data over time.

And I think we’ve seen, from Mary Ann, Jim, and others, emerging commentaries and theories that are specifically related to sustainability, rather than, as I said, that last little paragraph that’s either hopeful (“We think this will work down the road”) or says, “When we left, it looked like things were still going well.” We’re excited to see that there’s more beyond that.

We have a number of ongoing studies. These are just a few NIMH-supported ones that specifically focus on that later stage beyond initial implementation. Greg mentioned the Multidimensional Treatment Foster Care study that Patti Chamberlain has been involved in.

Lisa Saldana is the one who created, and is now testing more broadly, the Stages of Implementation Completion, which takes you all the way through to longer-term sustainability.

Joan Cook and Greg Aarons are both doing follow-ups to their initial implementation studies, looking at sustained use of evidence-based practices. Joan is looking at it for PTSD within the VA, and Greg, of course, has been looking at the SafeCare intervention down the road in Oklahoma and California.

Towards a Dynamic View of Sustainability

So here’s where I want to depart a little. I hope to see more thinking, whether arguments or some agreement, and more explicitness, rather than continuing with “That sounds right, and let’s never talk about it again.”

Really, what do we do if we try to not hold anything constant? If we try to make sure that actual change is at the center of things?

Evidence from Dissemination and Implementation

So this starts from evidence from the D&I field, where we know that service systems and their contexts are always changing.

We know that, whether we like it or not and whether we acknowledge it or not, evidence-based practices are being adapted all the time in many different ways.

We know intuitively, although it sometimes gets lost in designs, that consumers, patients, providers, and systems vary dramatically.

We know that evidence-based practices are rarely one-size-fits-all.

Implementation is a process, not a light switch where before it was dark and then there was implementation.

And we also know that fidelity does not always equal an optimal outcome; there’s variation. Because there’s variation, some of us believe we need to think a little differently, as Larry said, about this potential paradox, and try to find some happy medium where we’re driving toward optimal care.

Assumptions and Questions

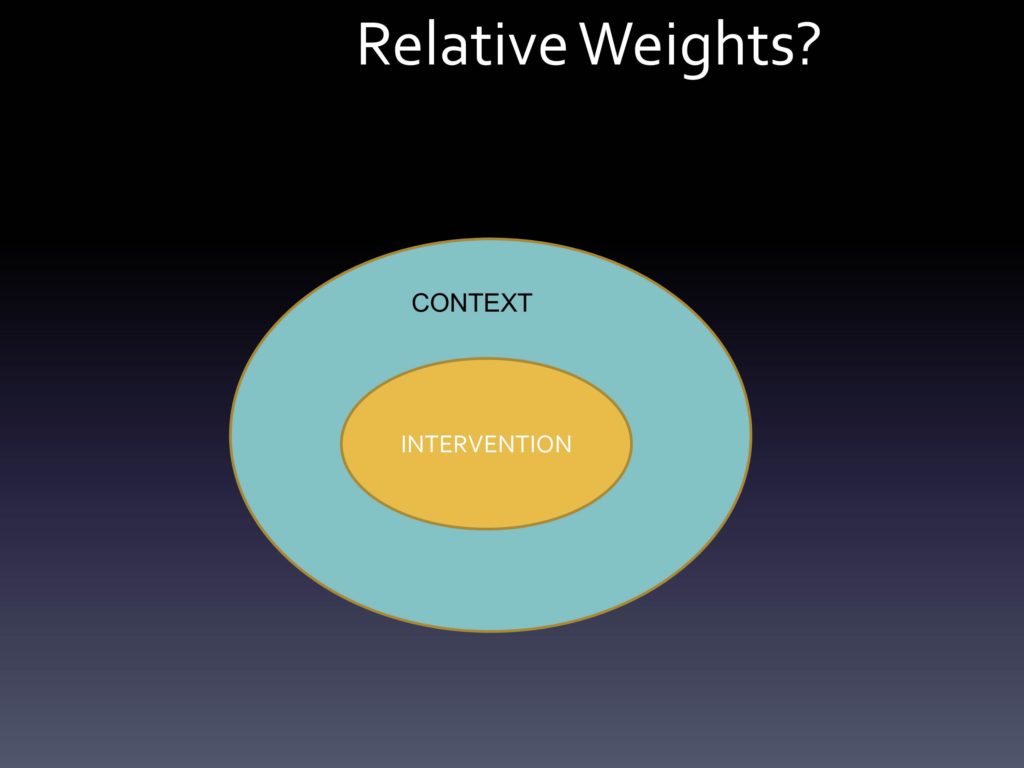

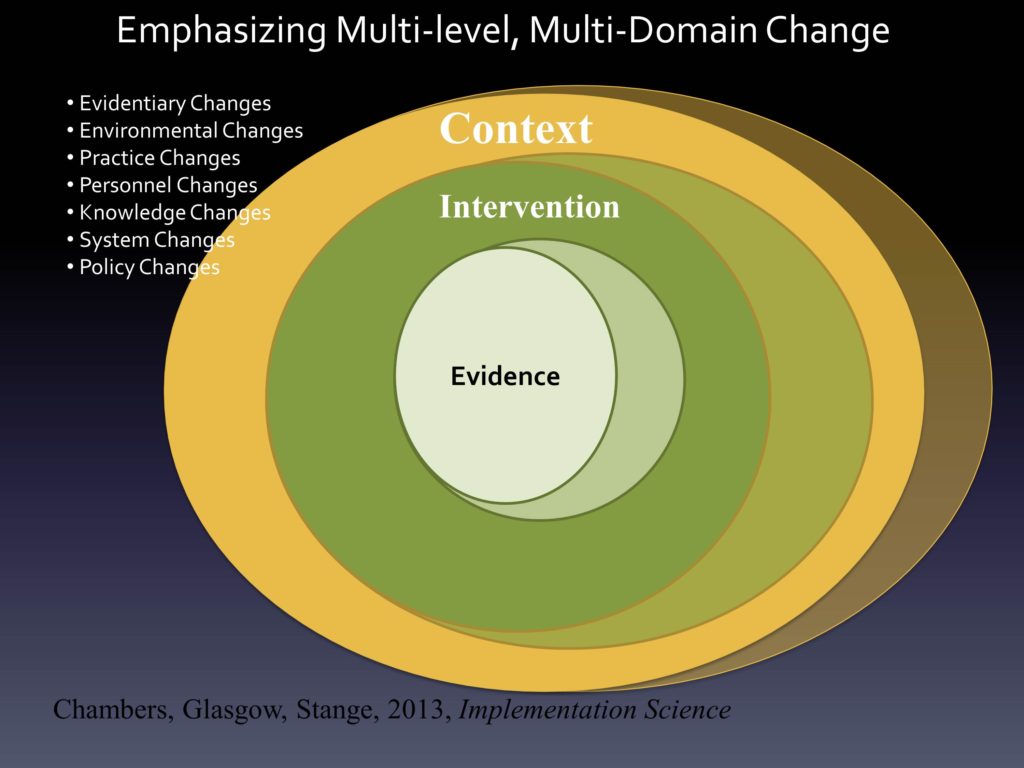

Multi-Level, Multi-Domain Change and Managing Intervention/Context Fit

The other thing we think about with our evidence, within an intervention and within a context, is that things are always evolving. We should expect to learn more and more about the evidence base. We should certainly expect, as has been identified, that adaptation, changing the intervention, is always occurring. And shouldn’t it be, unless we truly feel we got it right from the beginning? That would be amazing. But the original iPhone begat the 3G, the 4, the 5, the 5S, the 5C. Things change over time because of a belief that maybe things can get better.

Certainly, even though a lot of our studies do the contextual assessment on the front end, some are now looking at context over time. We know context is changing, and we lose a lot if we hold onto the assumption that once we’ve mapped out the context in a setting, we’re good to go forever: that nothing like, say, a big national healthcare reform or financing change, or other sorts of changes, is going to get in the way. Not to mention that there may be other, perhaps better, ways of delivering care over time.

We want these changes, which intuitively we fully expect to happen, to be reflected more and more in our thinking about how best to study them, whether they are changes in the evidence base, the environment, or the practice.

With people, we know how challenging turnover is; Greg has studied this a lot. People get trained and then are only in that job for 8 or 9 months, or less. We talked a lot with state commissioners of mental health and found that the average tenure of a commissioner in that job was 18 months. And that average included those who had been there 15 or 20 years, along with those who said, “Hey, we’re here, we’re ready to get things done. Oh, there’s another job.”

We know these changes are going on, but we rarely think about them as core elements of our studies, and we want to. So we are trying, ideally, to account for change at multiple levels and across multiple domains, and even to listen for it and adapt to it as it happens.

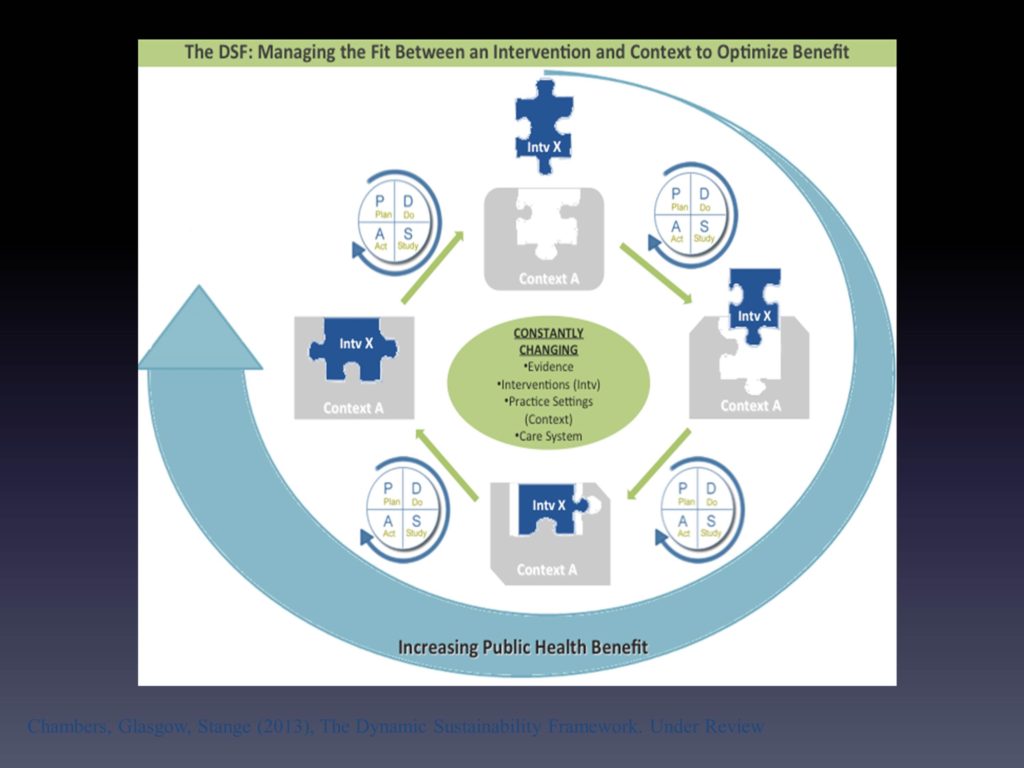

What Russ Glasgow, Kurt Stange, and I pulled together was an attempt to reflect what we see as this dynamic process. It’s by no means perfect, and it never will be, but if it were a little more accurate, what you would see is a three-dimensional orb of things colliding and changing shape.

The best we could do was to say: look at the puzzle piece, which is the intervention. Over time, we fully expect it to change, and ideally it changes in a way that makes it a better fit with the context, which, as the slight change in its shape indicates, is also changing every time.

For planning for and trying to respond to this change, we borrowed from the quality improvement literature; that’s where you see Plan-Do-Study-Act. That’s shorthand for whatever process you can systematically go through to look at the fit between the intervention and the context over time. Ideally these are ways not just to ask, “How do we move the context around to keep our intervention the way it always looked?” but ways in which we can learn and ultimately improve the overall benefit.

The overarching circle is our way of saying that we truly believe that if you’re sensitive to this fit over time, and you thoughtfully gather additional evidence at these additional levels, you should ideally be able to do much, much better than you did at the beginning, when you were really just trying to see how this might work in a new setting you hadn’t been in before.

We do see this as an evolving space, but ideally it’s a way to incorporate this notion of ongoing learning and ongoing improvement, not just at the organizational level, but by subjecting our interventions to the same kinds of questions we often reserve for our contexts, or for the noise we’re trying to clear out.

Final Thoughts

I do think there are a number of areas ripe for exploration across medicine and certainly in your area as well.

I think that we can look much more at the sustainability of evidence-based practices as they are delivered while big changes in the healthcare context are occurring.

We can also look a lot more at the adaptability, and even the evolution, of evidence-based practices over time, if we accept that adaptation is not necessarily bad.

I think we have increasing opportunities to think about scaling up practices, where they are being delivered across health plans, across systems, across networks.

Hendricks Brown and his center were referenced before. Methodologically, adaptive designs are one of the things we’ve been thinking about: can you think of implementation as a step-wise process in which new challenges arise and new questions might lend themselves to different options? Adaptive designs might contribute very nicely in this space.

Real-time feedback and monitoring is another way we think we can learn a lot more.

And, very importantly, we can study de-implementation, or exnovation: the idea that we should also focus on practices, whether or not they are evidence-based, that are clearly not good fits for the service systems and practitioners trying to deliver them, or for the client populations receiving them.

We do have this notion of dynamism. We have these key components that bring us further along than we were before.

We have outcomes management systems and the technology in place to see, in less and less invasive ways, whether our clients, our patients, our consumers (however we term the people we are trying to reach) are getting better.

We certainly have more and more scope, as some of the investigators in this room have already shown, for looking at quality measurement over time: is the intervention being delivered in a high-quality way?

We have more and more opportunity and resources to think about monitoring adaptation: how is the delivery of the intervention changing, within settings and across settings?

And we have more and more opportunity with data sets to look at how organizations are changing over time.

Our argument is that if we look at these various sources of data, ideally we’ll have a much better understanding of that particular fit between the intervention and context that we see as so incredibly important.

So, the take-home messages.

We had the recent meeting, and I think more resources from it will come. We now have assessment tools that are able to bridge into sustainability, which I think are going to be helpful.

I will say that although our traditional view of sustainability, starting from the notion of “to keep preserved in that original state,” may be limited, this is a tremendous opportunity for further research. We need to understand more about the time frame.

We really need to think more carefully about this notion of fidelity versus adaptation and, as I have heard Kurt Stange and several of us discuss, about the evolution of interventions over time. We all, or many of us, believe in evolution in other domains. So why shouldn’t we believe that our interventions can evolve as well, ideally with the better ones maintained through natural selection? We think this will ultimately optimize the effect of the intervention.

You’ll see a few references that are suggested, and please contribute more. This really is an area where there are relatively few empirical studies. There are some growing commentaries and theoretical pieces, but there is great, great scope for helping us all to think about this.

Questions and Discussion

Question: What does it mean to deliver an intervention in a high-quality way, i.e., being fit to the context while staying faithful to the theory and mechanism of action?

Question: In thinking about dynamism and sustainability, how does the rest of the patient’s life (maybe there’s an addiction, or maybe something not quite as salient) impact your model?

That’s right in line with what we’re thinking. The traditional way to study primary care (depression is what’s mostly studied there) is to assume there are no other problems going on. So you find the very rare population struggling with only this one problem, deliver your intervention, and say, hey, it looks like we can make a difference.

And then, of course, came the implementation challenge. All of a sudden the average patient in my clinic has five or more chronic conditions that we’re trying to deal with.

As we think about fit, it’s not just fit within a setting, but how you accommodate patient preferences, and even how someone is struggling on a given day. That’s crucial, and not assuming that someone is here for what I want them to do will push us into a much better place.

It’s a key component that’s often missed, in the same way that as researchers we assume all clients look the same, and they all come in waiting for what we’re ready to implement.